Online Microsoft 70-765 free dumps demo Below:

NEW QUESTION 1

You deploy a new Microsoft Azure SQL database instance to support a variety of mobile applications and public websites. You configure geo-replication with regions in Brazil and Japan.

You need to implement real-time encryption of the database and all backups.

Solution: You password-protect all Azure SQL backups and enable Azure Active Directory authentication for all Azure SQL server instances.

Does the solution meet the goal?

Answer: B

Explanation: Password protection does not encrypt the data.

Transparent Data Encryption (TDE) would provide a solution. References:

https://azure.microsoft.com/en-us/blog/how-to-configure-azure-sql-database-geo-dr-with-azure-key-vault/
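As a minimal sketch of what enabling TDE looks like in T-SQL (the database name MyDatabase is a hypothetical placeholder, not part of the question):

```sql
-- Hypothetical database name; replace with the real one.
-- Turns on Transparent Data Encryption for the database.
ALTER DATABASE [MyDatabase] SET ENCRYPTION ON;
GO

-- Confirm the encryption flag (is_encrypted = 1 once TDE is on).
SELECT name, is_encrypted
FROM sys.databases
WHERE name = N'MyDatabase';
GO
```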

NEW QUESTION 2

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are tuning the performance of a virtual machine that hosts a Microsoft SQL Server instance. The virtual machine originally had four CPU cores and now has 32 CPU cores.

The SQL Server instance uses the default settings and has an OLTP database named db1. The largest table in db1 is a key value store table named table1.

Several reports use the PIVOT statement and access more than 100 million rows in table1.

You discover that when the reports run, there are PAGELATCH_IO waits on PFS pages 2:1:1, 2:2:1, 2:3:1, and 2:4:1 within the tempdb database.

You need to prevent the PAGELATCH_IO waits from occurring.

Solution: You add more files to db1.

Does this meet the goal?

Answer: A

Explanation: From SQL Server’s perspective, you can measure the I/O latency from sys.dm_os_wait_stats. If you consistently see high waiting for PAGELATCH_IO, you can benefit from a faster I/O subsystem for SQL Server.

A cause can be poor design of your database - you may wish to split out data located on 'hot pages', which are accessed frequently and which you might identify as the causes of your latch contention. For example, if you have a currency table with a data page containing 100 rows, of which 1 is updated per transaction and you have a transaction rate of 200/sec, you could see page latch queues of 100 or more. If each page latch wait costs just 5ms before clearing, this represents a full half-second delay for each update. In this case, splitting out the currency rows into different tables might prove more performant (if less normalized and logically structured).

References: https://www.mssqltips.com/sqlservertip/3088/explanation-of-sql-server-io-and-latches/
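The wait statistics mentioned in the explanation can be inspected with a query along the following lines. This is only a sketch: the LIKE filters are an assumption about which latch wait types are of interest, and the second query simply lists the tempdb data files, since the waits in the scenario are on tempdb pages.

```sql
-- Cumulative latch-related waits since the last restart (or stats clear).
SELECT wait_type,
       waiting_tasks_count,
       wait_time_ms,
       max_wait_time_ms
FROM sys.dm_os_wait_stats
WHERE wait_type LIKE N'PAGELATCH%'
   OR wait_type LIKE N'PAGEIOLATCH%'
ORDER BY wait_time_ms DESC;

-- Data files currently defined for tempdb (size is stored in 8 KB pages).
SELECT name,
       physical_name,
       size * 8 / 1024 AS size_mb
FROM tempdb.sys.database_files
WHERE type_desc = N'ROWS';

-- Arithmetic from the explanation: 100 queued waiters * 5 ms each.
SELECT 100 * 5 AS queued_wait_ms;  -- 500 ms, i.e. half a second
```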

NEW QUESTION 3

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets stated goals.

You have a mission-critical application that stores data in a Microsoft SQL Server instance. The application runs several financial reports. The reports use a SQL Server-authenticated login named Reporting_User. All queries that write data to the database use Windows authentication.

Users report that the queries used to provide data for the financial reports take a long time to complete. The queries consume the majority of CPU and memory resources on the database server. As a result, read-write queries for the application also take a long time to complete.

You need to improve performance of the application while still allowing the report queries to finish.

Solution: You configure the Resource Governor to limit the amount of memory, CPU, and IOPS used for the pool of all queries that the Reporting_User login can run concurrently.

Does the solution meet the goal?

Answer: A

Explanation: SQL Server Resource Governor is a feature that you can use to manage SQL Server workload and system resource consumption. Resource Governor enables you to specify limits on the amount of CPU, physical IO, and memory that incoming application requests can use.

References: https://msdn.microsoft.com/en-us/library/bb933866.aspx
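A Resource Governor setup of the kind described might look like the sketch below. The pool, group, and function names, and all of the limit values, are hypothetical choices for illustration. Only the Reporting_User login comes from the question.

```sql
USE master;
GO

-- Hypothetical limits; tune to the workload.
CREATE RESOURCE POOL ReportingPool
WITH (
    MAX_CPU_PERCENT     = 30,
    MAX_MEMORY_PERCENT  = 30,
    MAX_IOPS_PER_VOLUME = 500
);
GO

CREATE WORKLOAD GROUP ReportingGroup
USING ReportingPool;
GO

-- Classifier: route sessions of the reporting login to the limited group.
CREATE FUNCTION dbo.fn_classify_reporting()
RETURNS sysname
WITH SCHEMABINDING
AS
BEGIN
    DECLARE @group sysname = N'default';
    IF SUSER_NAME() = N'Reporting_User'
        SET @group = N'ReportingGroup';
    RETURN @group;
END;
GO

ALTER RESOURCE GOVERNOR WITH (CLASSIFIER_FUNCTION = dbo.fn_classify_reporting);
ALTER RESOURCE GOVERNOR RECONFIGURE;
GO
```

The classifier only affects new sessions, so existing Reporting_User connections keep their current group until they reconnect.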

NEW QUESTION 4

You have Microsoft SQL Server on a Microsoft Azure virtual machine that has a database named DB1. You discover that DB1 experiences WRITE_LOG waits that are longer than 50 ms.

You need to reduce the WRITE_LOG wait time.

Solution: Add additional log files to DB1.

Does this meet the goal?

Answer: B

Explanation: This problem is related to the disk response time, not to the number of log files.

References:

https://www.mssqltips.com/sqlservertip/4131/troubleshooting-sql-server-transaction-log-related-wait-types/
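To see whether disk response time is the issue, the write latency of the log file can be estimated from sys.dm_io_virtual_file_stats. The query below is a sketch. It assumes the database is named DB1 as in the question and reports averages since the instance last started.

```sql
-- Average write latency per log file of DB1.
SELECT DB_NAME(vfs.database_id) AS database_name,
       mf.physical_name,
       vfs.num_of_writes,
       vfs.io_stall_write_ms,
       CASE WHEN vfs.num_of_writes = 0 THEN 0
            ELSE vfs.io_stall_write_ms * 1.0 / vfs.num_of_writes
       END AS avg_write_latency_ms
FROM sys.dm_io_virtual_file_stats(DB_ID(N'DB1'), NULL) AS vfs
JOIN sys.master_files AS mf
  ON mf.database_id = vfs.database_id
 AND mf.file_id     = vfs.file_id
WHERE mf.type_desc = N'LOG';
```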

NEW QUESTION 5

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets stated goals.

You manage a Microsoft SQL Server environment with several databases.

You need to ensure that queries use statistical data and do not initialize values for local variables.

Solution: You enable the QUERY_OPTIMIZER_HOTFIXES option for the databases.

Does the solution meet the goal?

Answer: B

Explanation: QUERY_OPTIMIZER_HOTFIXES = { ON | OFF | PRIMARY } enables or disables query optimization hotfixes regardless of the compatibility level of the database. This is equivalent to Trace Flag 4199.

References: https://msdn.microsoft.com/en-us/library/mt629158.aspx
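For reference, the option discussed in the explanation is set per database with ALTER DATABASE SCOPED CONFIGURATION (SQL Server 2016 or later). A minimal sketch, with MyDatabase as a hypothetical database name:

```sql
USE [MyDatabase];  -- hypothetical database name
GO

-- Enable query optimizer hotfixes for this database only.
ALTER DATABASE SCOPED CONFIGURATION SET QUERY_OPTIMIZER_HOTFIXES = ON;
GO

-- Inspect the current setting.
SELECT name, value
FROM sys.database_scoped_configurations
WHERE name = N'QUERY_OPTIMIZER_HOTFIXES';
GO
```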

NEW QUESTION 6

You administer a Microsoft SQL Server 2014 database that includes a table named Application.Events. Application.Events contains millions of records about user activity in an application.

Records in Application.Events that are more than 90 days old are purged nightly. When records are purged, table locks are causing contention with inserts.

You need to be able to modify Application.Events without requiring any changes to the applications that utilize Application.Events.

Which type of solution should you use?

Answer: A

Explanation: Partitioning large tables or indexes can have manageability and performance benefits including:

You can perform maintenance operations on one or more partitions more quickly. The operations are more efficient because they target only these data subsets, instead of the whole table.

References: https://docs.microsoft.com/en-us/sql/relational-databases/partitions/partitioned-tables-and-indexes
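Assuming the intended answer is a partitioned table, as the explanation suggests, the purge can become a metadata-only partition switch instead of a large DELETE. The sketch below uses a hypothetical demo table, column names, and boundary dates rather than the real Application.Events definition, which the question does not show.

```sql
-- Monthly boundaries (hypothetical dates).
CREATE PARTITION FUNCTION pf_EventDate (date)
AS RANGE RIGHT FOR VALUES ('2024-01-01', '2024-02-01', '2024-03-01');
GO

CREATE PARTITION SCHEME ps_EventDate
AS PARTITION pf_EventDate ALL TO ([PRIMARY]);
GO

-- Hypothetical stand-in for the events table, partitioned by date.
CREATE TABLE dbo.Events_Demo (
    EventId   bigint        NOT NULL,
    EventDate date          NOT NULL,
    Detail    nvarchar(200) NULL,
    CONSTRAINT PK_Events_Demo PRIMARY KEY CLUSTERED (EventDate, EventId)
) ON ps_EventDate (EventDate);
GO

-- Empty staging table with identical structure on the same filegroup.
CREATE TABLE dbo.Events_Demo_Staging (
    EventId   bigint        NOT NULL,
    EventDate date          NOT NULL,
    Detail    nvarchar(200) NULL,
    CONSTRAINT PK_Events_Demo_Staging PRIMARY KEY CLUSTERED (EventDate, EventId)
) ON [PRIMARY];
GO

-- Move the oldest partition out, then discard it.
ALTER TABLE dbo.Events_Demo SWITCH PARTITION 1 TO dbo.Events_Demo_Staging;
TRUNCATE TABLE dbo.Events_Demo_Staging;
GO
```

Because the table name stays the same, applications that query it would not need to change.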

NEW QUESTION 7

Note: This question is part of a series of questions that use the same or similar answer choices. An answer choice may be correct for more than one question in the series. Each question is independent of the other questions in this series. Information and details provided in a question apply only to that question.

You have deployed several GS-series virtual machines (VMs) in Microsoft Azure. You plan to deploy Microsoft SQL Server in a development environment. Each VM has a dedicated disk for backups.

You need to back up a database to the local disk on a VM. The backup must be replicated to another region.

Which storage option should you use?

Answer: E

Explanation: Note: SQL Database automatically creates database backups and uses Azure read-access geo-redundant storage (RA-GRS) to provide geo-redundancy. These backups are created automatically and at no additional charge. You don't need to do anything to make them happen. Database backups are an essential part of any business continuity and disaster recovery strategy because they protect your data from accidental corruption or deletion.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-automated-backups

NEW QUESTION 8

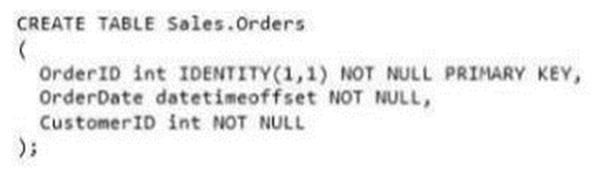

You are designing a Windows Azure SQL Database for an order fulfillment system. You create a table named Sales.Orders with the following script.

Each order is tracked by using one of the following statuses:

Fulfilled

Shipped

Ordered

Received

You need to design the database to ensure that you can retrieve the following information:

The current status of an order

The previous status of an order.

The date when the status changed.

The solution must minimize storage.

More than one answer choice may achieve the goal. Select the BEST answer.

Answer: A

Explanation: This stores only the minimal information required.

NEW QUESTION 9

You administer a Microsoft SQL Server 2014 database. You want to make a full backup of the database to a file on disk.

In doing so, you need to output the progress of the backup. Which backup option should you use?

Answer: A

Explanation: STATS is a monitoring option of the BACKUP command. STATS [ =percentage ]

Displays a message each time another percentage completes, and is used to gauge progress. If percentage is omitted, SQL Server displays a message after each 10 percent is completed.

The STATS option reports the percentage complete as of the threshold for reporting the next interval. This is at approximately the specified percentage; for example, with STATS=10, if the amount completed is 40 percent, the option might display 43 percent. For large backup sets, this is not a problem, because the percentage complete moves very slowly between completed I/O calls.

References: https://docs.microsoft.com/en-us/sql/t-sql/statements/backup-transact-sql
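A full backup that reports progress might be written as follows. The database name and file path are hypothetical placeholders.

```sql
-- Full backup to disk; prints a progress message at roughly every 10 percent.
BACKUP DATABASE [MyDatabase]
TO DISK = N'D:\Backups\MyDatabase_full.bak'
WITH INIT, STATS = 10;
GO
```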

NEW QUESTION 10

You administer a Microsoft SQL Server 2014 database.

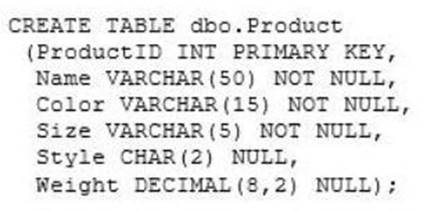

The database contains a Product table created by using the following definition:

You need to ensure that the minimum amount of disk space is used to store the data in the Product table. What should you do?

Answer: D

NEW QUESTION 11

You administer a Microsoft SQL Server 2014 instance that contains a financial database hosted on a storage area network (SAN).

The financial database has the following characteristics:

The database is continually modified by users during business hours from Monday through Friday between 09:00 hours and 17:00 hours. Five percent of the existing data is modified each day.

The Finance department loads large CSV files into a number of tables each business day at 11:15 hours and 15:15 hours by using the BCP or BULK INSERT commands. Each data load adds 3 GB of data to the database.

These data load operations must occur in the minimum amount of time.

A full database backup is performed every Sunday at 10:00 hours. Backup operations will be performed every two hours (11:00, 13:00, 15:00, and 17:00) during business hours.

On Wednesday at 10:00 hours, the development team requests you to refresh the database on a development server by using the most recent version.

You need to perform a full database backup that will be restored on the development server. Which backup option should you use?

Answer: L

Explanation: COPY_ONLY specifies that the backup is a copy-only backup, which does not affect the normal sequence of backups. A copy-only backup is created independently of your regularly scheduled, conventional backups. A copy-only backup does not affect your overall backup and restore procedures for the database.

References:

https://docs.microsoft.com/en-us/sql/t-sql/statements/backup-transact-sql
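A copy-only full backup for the development refresh could look like the sketch below. The database name FinanceDB and the backup path are assumptions for illustration.

```sql
-- Full backup that does not reset the differential base
-- or otherwise disturb the scheduled backup sequence.
BACKUP DATABASE [FinanceDB]
TO DISK = N'D:\Backups\FinanceDB_copyonly.bak'
WITH COPY_ONLY, INIT, STATS = 10;
GO
```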

NEW QUESTION 12

You create a new Microsoft Azure subscription.

You need to create a group of Azure SQL databases that share resources. Which cmdlet should you run first?

Answer: D

Explanation: SQL Database elastic pools are a simple, cost-effective solution for managing and scaling multiple databases that have varying and unpredictable usage demands. The databases in an elastic pool are on a single Azure SQL Database server and share a set number of resources (elastic Database Transaction Units (eDTUs)) at a set price. Elastic pools in Azure SQL Database enable SaaS developers to optimize the price performance for a group of databases within a prescribed budget while delivering performance elasticity for each database.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool

NEW QUESTION 13

You have an on-premises Microsoft SQL Server named Server1.

You provision a Microsoft Azure SQL Database server named Server2. On Server1, you create a database named DB1.

You need to enable the Stretch Database feature for DB1.

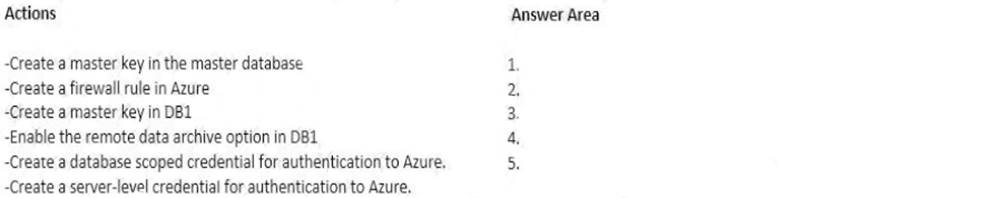

Which five actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer:

Explanation:

Step 1: Enable the remote data archive option in DB1

Prerequisite: Enable Stretch Database on the server

Before you can enable Stretch Database on a database or a table, you have to enable it on the local server. To enable Stretch Database on the server manually, run sp_configure and turn on the remote data archive option.

Step 2: Create a firewall rule in Azure

On the Azure server, create a firewall rule with the IP address range of the SQL Server that lets SQL Server communicate with the remote server.

Step 3: Create a master key in the master database

To configure a SQL Server database for Stretch Database, the database has to have a database master key. The database master key secures the credentials that Stretch Database uses to connect to the remote database.

Step 4: Create a database scoped credential for authentication to Azure

When you configure a database for Stretch Database, you have to provide a credential for Stretch Database to use for communication between the on premises SQL Server and the remote Azure server. You have two options.

Step 5: Create a server-level credential for authentication to Azure.

To configure a database for Stretch Database, run the ALTER DATABASE command. For the SERVER argument, provide the name of an existing Azure server, including the .database.windows.net portion of the name - for example, MyStretchDatabaseServer.database.windows.net.

Provide an existing administrator credential with the CREDENTIAL argument, or specify FEDERATED_SERVICE_ACCOUNT = ON. The following example provides an existing credential.

```sql
ALTER DATABASE <database name>
    SET REMOTE_DATA_ARCHIVE = ON
        (
            SERVER = '<server_name>',
            CREDENTIAL = <db_scoped_credential_name>
        );
GO
```

References:

https://docs.microsoft.com/en-us/sql/sql-server/stretch-database/enable-stretch-database-for-a-database?view=sq
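The server option, master key, and credential steps described above can be sketched in T-SQL as follows. The credential name StretchCredential and the angle-bracket placeholders are hypothetical. The master key is created in DB1 here, following the explanatory paragraph that says the database itself must have a database master key.

```sql
-- Enable Stretch Database at the instance level.
EXEC sp_configure 'remote data archive', 1;
RECONFIGURE;
GO

USE DB1;
GO

-- Database master key that protects the credential below.
CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<strong_password>';
GO

-- Credential used to connect to the Azure server (hypothetical name).
CREATE DATABASE SCOPED CREDENTIAL StretchCredential
WITH IDENTITY = '<azure_admin_login>',
     SECRET   = '<azure_admin_password>';
GO
```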

NEW QUESTION 14

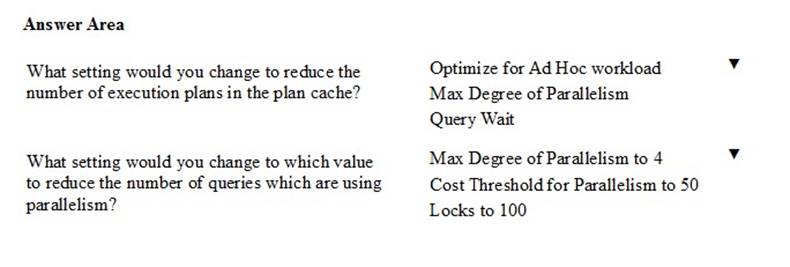

HOTSPOT

You need to resolve the identified issues.

Use the drop-down menus to select the answer choice that answers each question based on the information presented in the graphic.

Answer:

Explanation: From exhibit we see:

Cost Threshold for Parallelism: 5

Optimize for Ad Hoc Workloads: false

Max Degree of Parallelism: 0 (This is the default setting, which enables the server to determine the maximum degree of parallelism. It is fine.)

Locks: 0

Query Wait: -1

Box 1: Optimize for Ad Hoc Workload

Change the Optimize for Ad Hoc Workload setting from false to 1/True.

The optimize for ad hoc workloads option is used to improve the efficiency of the plan cache for workloads that contain many single use ad hoc batches. When this option is set to 1, the Database Engine stores a small compiled plan stub in the plan cache when a batch is compiled for the first time, instead of the full compiled plan. This helps to relieve memory pressure by not allowing the plan cache to become filled with compiled plans that are not reused.
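The same change can be made with sp_configure instead of the server properties dialog. A minimal sketch:

```sql
-- The option is an advanced one, so expose advanced options first.
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;
GO

EXEC sp_configure 'optimize for ad hoc workloads', 1;
RECONFIGURE;
GO

-- Verify the configured and running values.
SELECT name, value, value_in_use
FROM sys.configurations
WHERE name = N'optimize for ad hoc workloads';
GO
```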

NEW QUESTION 15

You administer a Microsoft SQL Server 2014 instance.

A process that normally runs in less than 10 seconds has been running for more than an hour. You examine the application log and discover that the process is using session ID 60.

You need to find out whether the process is being blocked. Which Transact-SQL statement should you use?

Answer: A

Explanation: sp_who provides information about current users, sessions, and processes in an instance of the Microsoft SQL Server Database Engine. The information can be filtered to return only those processes that are not idle, that belong to a specific user, or that belong to a specific session.

Example: Displaying a specific process identified by a session ID

```sql
EXEC sp_who '10'; --specifies the process_id
```

References:https://docs.microsoft.com/en-us/sql/relational-databases/system-stored-procedures/sp-who-transact-
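Applied to the session in the question, the check might look like this. The second query against sys.dm_exec_requests is an additional way to see the same information and is not part of the original answer.

```sql
-- The blk column shows the session ID of the blocker (0 if not blocked).
EXEC sp_who '60';
GO

-- Alternative view: a non-zero blocking_session_id means the request is blocked.
SELECT session_id,
       blocking_session_id,
       wait_type,
       wait_time,
       status
FROM sys.dm_exec_requests
WHERE session_id = 60;
GO
```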

NEW QUESTION 16

You administer a Microsoft SQL Server 2014 database.

You need to ensure that the size of the transaction log file does not exceed 2 GB. What should you do?

Answer: B

Explanation: You can use the ALTER DATABASE (Transact-SQL) statement to manage the growth of a transaction log file.

To control the maximum size of a log file in KB, MB, GB, and TB units or to set growth to UNLIMITED, use the MAXSIZE option. However, there is no SET LOGFILE subcommand.

References: https://technet.microsoft.com/en-us/library/ms365418(v=sql.110).aspx#ControlGrowth
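A sketch of capping the log file with MAXSIZE follows. The database name and the logical log file name are hypothetical. The first query shows how to look up the actual logical name.

```sql
USE [MyDatabase];  -- hypothetical database name
GO

-- Find the logical name of the log file.
SELECT name, type_desc, size, max_size
FROM sys.database_files
WHERE type_desc = N'LOG';
GO

-- Cap the log file at 2 GB.
ALTER DATABASE [MyDatabase]
MODIFY FILE (NAME = N'MyDatabase_log', MAXSIZE = 2GB);
GO
```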

NEW QUESTION 17

HOTSPOT

You need to optimize SRV1.

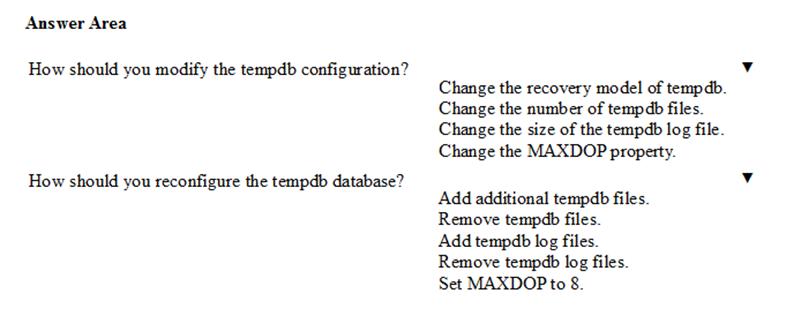

What configuration changes should you implement? To answer, select the appropriate option from each list in the answer area.

Answer:

Explanation: From the scenario: SRV1 has 16 logical cores and hosts a SQL Server instance that supports a mission-critical application. The application has approximately 30,000 concurrent users and relies heavily on the use of temporary tables.

Box 1: Change the size of the tempdb log file.

The size and physical placement of the tempdb database can affect the performance of a system. For example, if the size that is defined for tempdb is too small, part of the system-processing load may be taken up with autogrowing tempdb to the size required to support the workload every time you restart the instance of SQL Server. You can avoid this overhead by increasing the sizes of the tempdb data and log file.

Box 2: Add additional tempdb files.

Create as many files as needed to maximize disk bandwidth. Using multiple files reduces tempdb storage contention and yields significantly better scalability. However, do not create too many files because this can reduce performance and increase management overhead. As a general guideline, create one data file for each CPU on the server (accounting for any affinity mask settings) and then adjust the number of files up or down as necessary.
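Both changes can be sketched with ALTER DATABASE. The sizes, the new file name tempdev2, and the T:\TempDB path are hypothetical. templog is the default logical name of the tempdb log file.

```sql
-- Pre-size the tempdb log so it does not autogrow after each restart.
ALTER DATABASE tempdb
MODIFY FILE (NAME = templog, SIZE = 4GB);
GO

-- Add one more data file; repeat with new names for further files.
ALTER DATABASE tempdb
ADD FILE (
    NAME       = tempdev2,
    FILENAME   = N'T:\TempDB\tempdev2.ndf',
    SIZE       = 8GB,
    FILEGROWTH = 512MB
);
GO
```

Keeping all tempdb data files the same size helps the engine spread allocations evenly across them.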

Topic 5, Contoso, Ltd Case Study 2

Background

You are the database administrator for Contoso, Ltd. The company has 200 offices around the world. The company has corporate executives that are located in offices in London, New York, Toronto, Sydney, and Tokyo.

Contoso, Ltd. has a Microsoft Azure SQL Database environment. You plan to deploy a new Azure SQL Database to support a variety of mobile applications and public websites.

The company is deploying a multi-tenant environment. The environment will host Azure SQL Database instances. The company plans to make the instances available to internal departments and partner companies. Contoso is in the final stages of setting up networking and communications for the environment.

Existing Contoso and Customer instances need to be migrated to Azure virtual machines (VM) according to the following requirements:

The company plans to deploy a new order entry application and a new business intelligence and analysis application. Each application will be supported by a new database. Contoso creates a new Azure SQL database named Reporting. The database will be used to support the company's financial reporting requirements. You associate the database with the Contoso Azure Active Directory domain.

Each location database for the data entry application may have an unpredictable amount of activity. Data must be replicated to secondary databases in Azure datacenters in different regions.

To support the application, you need to create a database named contosodb1 in the existing environment.

Objects

Database

The contosodb1 database must support the following requirements:

Application

For the business intelligence application, corporate executives must be able to view all data in near real-time with low network latency.

Contoso has the following security, networking, and communications requirements:

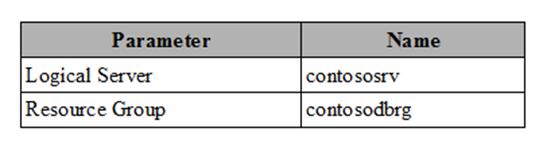

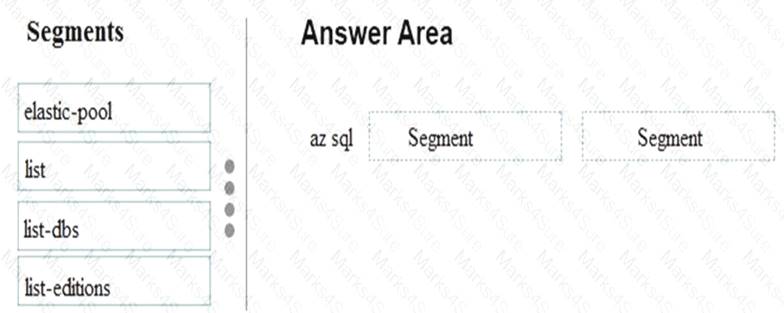

NEW QUESTION 18

Your company has several Microsoft Azure SQL Database instances used within an elastic pool. You need to obtain a list of databases in the pool.

How should you complete the commands? To answer, drag the appropriate segments to the correct targets. Each segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation: References:

https://docs.microsoft.com/en-us/cli/azure/sql/elastic-pool?view=azure-cli-latest#az-sql-elastic-pool-list-dbs

NEW QUESTION 19

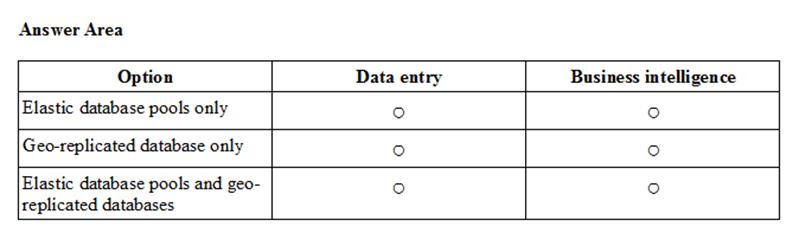

HOTSPOT

You need to configure the data entry and business intelligence databases. In the table below, identify the option that you must use for each database. NOTE: Make only one selection in each column.

Answer:

Explanation: Data Entry: Geo-replicated database only

From Contoso scenario: Each location database for the data entry application may have an unpredictable amount of activity. Data must be replicated to secondary databases in Azure datacenters in different regions.

Business intelligence: Elastic database pools only

From Contoso scenario: For the business intelligence application, corporate executives must be able to view all data in near real-time with low network latency.

SQL DB elastic pools provide a simple cost effective solution to manage the performance goals for multiple databases that have widely varying and unpredictable usage patterns.

References: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool

Topic 6, SQL Server Reporting

Background

You manage a Microsoft SQL Server environment that includes the following databases: DB1, DB2, Reporting.

The environment also includes SQL Reporting Services (SSRS) and SQL Server Analysis Services (SSAS). All SSRS and SSAS servers use named instances. You configure a firewall rule for SSAS.

Databases

Database Name: DB1

Notes:

This database was migrated from SQL Server 2012 to SQL Server 2016. Thousands of records are inserted into DB1 or updated each second. Inserts are made by many different external applications that your company's developers do not control. You observe that transaction log write latency is a bottleneck in performance. Because of the transient nature of all the data in this database, the business can tolerate some data loss in the event of a server shutdown.

Database Name: DB2

Notes:

This database was migrated from SQL Server 2012 to SQL Server 2016. Thousands of records are updated or inserted per second. You observe that the WRITELOG wait type is the highest aggregated wait type. Most writes must have no tolerance for data loss in the event of a server shutdown. The business has identified certain write queries where data loss is tolerable in the event of a server shutdown.

Database Name: Reporting

Notes:

You create a SQL Server-authenticated login named BIAppUser on the SQL Server instance to support users of the Reporting database. The BIAppUser login is not a member of the sysadmin role.

You plan to configure performance-monitoring alerts for this instance by using SQL Agent Alerts.
