Free demo questions for Microsoft 70-776 Exam Dumps Below:

NEW QUESTION 1

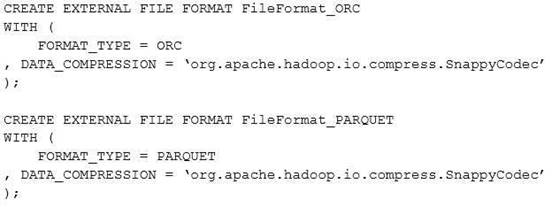

You have a Microsoft Azure SQL data warehouse. The following statements are used to define file formats in the data warehouse.

You have an external PolyBase table named file_factPowerMeasurement that uses the FileFormat_ORC file format.

You need to change file_factPowerMeasurement to use the FileFormat_PARQUET file format. Which two statements should you execute? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

Answer: AE

NEW QUESTION 2

You need to define an input dataset for a Microsoft Azure Data Factory pipeline.

Which properties should you include when you define the dataset?

Answer: A

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/v1/data-factory-create-datasets
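
As a rough illustration of the dataset definition this question refers to, the sketch below checks a Data Factory (version 1) dataset for the properties that the referenced documentation lists as required. The dataset name, linked service name, and folder path are hypothetical, and the list of required keys is an assumption based on that documentation rather than on the answer options, which are not reproduced here.

```python
# Keys assumed to be required for an ADF v1 dataset, per the referenced documentation.
REQUIRED_TOP_LEVEL = ("name", "properties")
REQUIRED_PROPERTIES = ("type", "linkedServiceName", "typeProperties", "availability")


def missing_required(dataset: dict) -> list:
    """Return the required keys that are absent from a dataset definition."""
    missing = [key for key in REQUIRED_TOP_LEVEL if key not in dataset]
    props = dataset.get("properties", {})
    missing += ["properties." + key for key in REQUIRED_PROPERTIES if key not in props]
    return missing


# Hypothetical input dataset that points at a blob folder.
input_dataset = {
    "name": "InputBlobDataset",                       # hypothetical name
    "properties": {
        "type": "AzureBlob",
        "linkedServiceName": "StorageLinkedService",  # hypothetical linked service
        "typeProperties": {"folderPath": "input/"},   # hypothetical path
        "external": True,                             # optional; input is produced outside the factory
        "availability": {"frequency": "Hour", "interval": 1},
    },
}

print(missing_required(input_dataset))
```

Optional properties such as structure and policy are deliberately left out of the check.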

NEW QUESTION 3

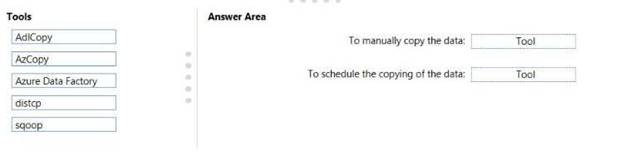

DRAG DROP

You are designing a Microsoft Azure analytics solution. The solution requires that data be copied from Azure Blob storage to Azure Data Lake Store.

The data will be copied on a recurring schedule. Occasionally, the data will be copied manually. You need to recommend a solution to copy the data.

Which tools should you include in the recommendation? To answer, drag the appropriate tools to the correct requirements. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

NEW QUESTION 4

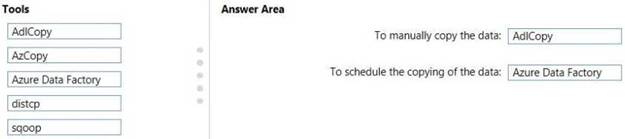

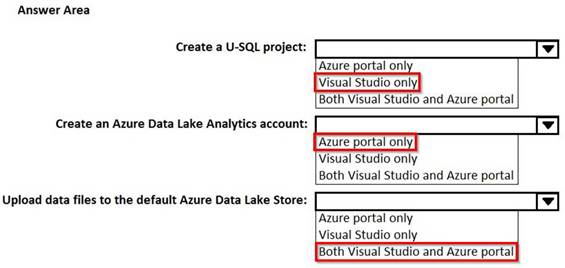

HOTSPOT

You use Microsoft Visual Studio to develop custom solutions for customers who use Microsoft Azure Data Lake Analytics.

You install the Data Lake Tools for Visual Studio.

You need to identify which tasks can be performed from Visual Studio and which tasks can be performed from the Azure portal.

What should you identify for each task? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Answer:

Explanation:

NEW QUESTION 5

You plan to create several U-SQL jobs.

You need to store structured data and code that can be shared by the U-SQL jobs. What should you use?

Answer: C

NEW QUESTION 6

You plan to deploy a Microsoft Azure virtual machine that will host a data warehouse. The data warehouse will contain a 10-TB database.

You need to provide the fastest read and write times for the database. Which disk configuration should you use?

Answer: E

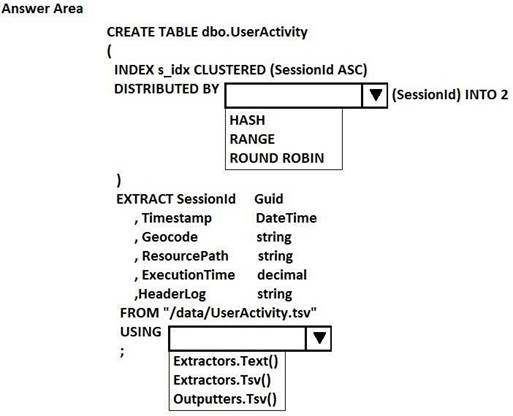

NEW QUESTION 7

HOTSPOT

You have a Microsoft Azure Data Lake Analytics service.

You have a tab-delimited file named UserActivity.tsv that contains logs of user sessions. The file does not have a header row.

You need to create a table and to load the logs to the table. The solution must distribute the data by a column named SessionId.

How should you complete the U-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

References:

https://msdn.microsoft.com/en-us/library/mt706197.aspx

NEW QUESTION 8

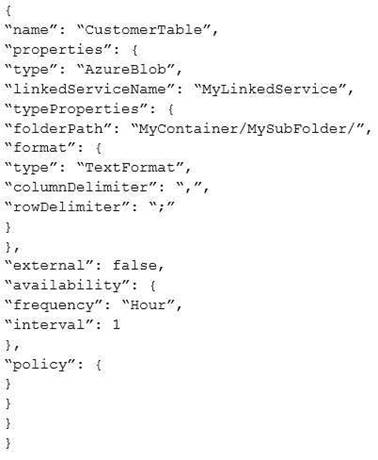

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are troubleshooting a slice in Microsoft Azure Data Factory for a dataset that has been in a waiting state for the last three days. The dataset should have been ready two days ago.

The dataset is being produced outside the scope of Azure Data Factory. The dataset is defined by using the following JSON code.

You need to modify the JSON code to ensure that the dataset is marked as ready whenever there is data in the data store.

Solution: You add a structure property to the dataset.

Does this meet the goal?

Answer: B

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/v1/data-factory-create-datasets
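
The JSON from the question is not reproduced here, so the following is only a sketch on a hypothetical dataset. According to the referenced version 1 documentation, a dataset that is produced outside Data Factory is flagged with `"external": true`, optionally with an `externalData` policy that controls retries. A `structure` property only describes columns and does not affect slice readiness. The helper name, dataset name, and retry values below are assumptions.

```python
import copy


def mark_external(dataset: dict,
                  retry_interval: str = "00:01:00",
                  retry_timeout: str = "00:10:00",
                  maximum_retry: int = 3) -> dict:
    """Return a copy of an ADF v1 dataset definition flagged as externally produced."""
    updated = copy.deepcopy(dataset)
    props = updated.setdefault("properties", {})
    props["external"] = True
    # Optional retry policy for external data (values are illustrative).
    props.setdefault("policy", {})["externalData"] = {
        "retryInterval": retry_interval,
        "retryTimeout": retry_timeout,
        "maximumRetry": maximum_retry,
    }
    return updated


# Hypothetical dataset standing in for the one shown in the question.
waiting_dataset = {
    "name": "ExternalBlobDataset",
    "properties": {
        "type": "AzureBlob",
        "linkedServiceName": "StorageLinkedService",
        "typeProperties": {"folderPath": "incoming/"},
        "availability": {"frequency": "Day", "interval": 1},
    },
}

ready_dataset = mark_external(waiting_dataset)
print(ready_dataset["properties"]["external"])
```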

NEW QUESTION 9

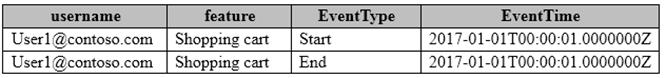

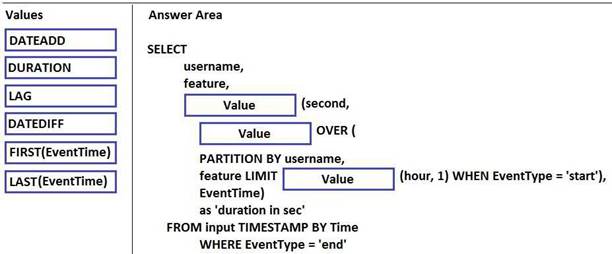

DRAG DROP

You have a Microsoft Azure Stream Analytics solution that captures website visits and user interactions on the website.

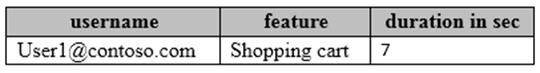

You have the sample input data described in the following table.

You have the sample output described in the following table.

How should you complete the script? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

References:

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-stream-analytics-query-patterns

NEW QUESTION 10

You have a Microsoft Azure Data Lake Analytics service. You plan to configure diagnostic logging.

You need to use Microsoft Operations Management Suite (OMS) to monitor the IP addresses that are used to access the Data Lake Store.

What should you do?

Answer: B

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-lake-analytics/data-lake-analytics-diagnostic-logs

https://docs.microsoft.com/en-us/azure/security/azure-log-audit

NEW QUESTION 11

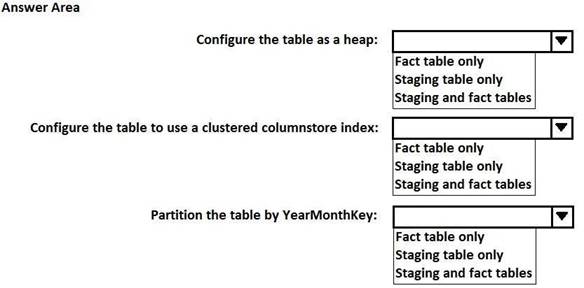

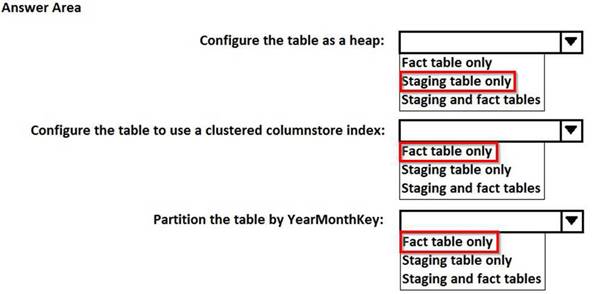

HOTSPOT

You are designing a fact table that has 100 million rows and 1,800 partitions. The partitions are defined based on a column named OrderDayKey. The fact table will contain:

Data from the last five years

A clustered columnstore index

A column named YearMonthKey that stores the year and the month

Multiple transformations will be performed on the fact table during the loading process. The fact table will be hash distributed on a column named OrderId.

You plan to load the data to a staging table and to perform transformations on the staging table. You will then load the data from the staging table to the final fact table.

You need to design a solution to load the data to the fact table. The solution must minimize how long it takes to perform the following tasks:

Load the staging table.

Transfer the data from the staging table to the fact table.

Remove data that is older than five years.

Query the data in the fact table.

How should you configure the tables? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Answer:

Explanation:
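
One consideration behind the partitioning choice can be worked out with simple arithmetic. The sketch below assumes that rows spread evenly, that Azure SQL Data Warehouse splits every table across 60 distributions, and that a columnstore rowgroup holds up to 1,048,576 rows. It compares the 1,800 daily partitions from the question with a hypothetical 60 monthly partitions (five years keyed on YearMonthKey).

```python
TOTAL_ROWS = 100_000_000
DISTRIBUTIONS = 60            # fixed number of distributions in Azure SQL Data Warehouse
ROWGROUP_CAPACITY = 1_048_576  # maximum rows in one columnstore rowgroup


def rows_per_partition_slice(partitions: int) -> float:
    """Average rows that land in one partition of one distribution."""
    return TOTAL_ROWS / (partitions * DISTRIBUTIONS)


daily = rows_per_partition_slice(1_800)  # partitioned on OrderDayKey
monthly = rows_per_partition_slice(60)   # hypothetical: partitioned on YearMonthKey

print(f"daily partitions:   {daily:,.0f} rows per slice")
print(f"monthly partitions: {monthly:,.0f} rows per slice")
print(f"rowgroup capacity:  {ROWGROUP_CAPACITY:,} rows")
```

Under these assumptions, both layouts fall well short of a full rowgroup, and the daily layout falls short by a much wider margin, which is why heavy partitioning tends to hurt clustered columnstore compression and scan performance at this table size.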

NEW QUESTION 12

DRAG DROP

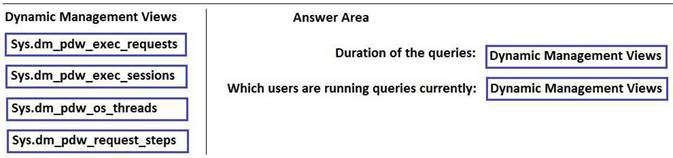

You have a Microsoft Azure SQL data warehouse.

Users discover that reports running in the data warehouse take longer than expected to complete. You need to review the duration of the queries and which users are running the queries currently.

Which dynamic management view should you review for each requirement? To answer, drag the appropriate dynamic management views to the correct requirements. Each dynamic management view may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

References:

https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-pdw-exec-requests-transact-sql

https://docs.microsoft.com/en-us/sql/relational-databases/system-dynamic-management-views/sys-dm-pdw-exec-sessions-transact-sql
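
The two views in the references can be combined in one query: `sys.dm_pdw_exec_requests` carries the elapsed time of each request, and `sys.dm_pdw_exec_sessions` carries the login that owns the session. The sketch below holds such a query in a Python string and runs it through any DB-API cursor. The function name is hypothetical, and opening the connection is left to the caller.

```python
# T-SQL that lists requests that are still active, longest running first.
ACTIVE_REQUESTS_SQL = """
SELECT r.request_id,
       s.login_name,
       r.status,
       r.submit_time,
       r.total_elapsed_time,   -- milliseconds
       r.command
FROM sys.dm_pdw_exec_requests AS r
JOIN sys.dm_pdw_exec_sessions AS s
  ON r.session_id = s.session_id
WHERE r.status NOT IN ('Completed', 'Failed', 'Cancelled')
ORDER BY r.total_elapsed_time DESC;
"""


def fetch_active_requests(cursor):
    """Run the query on a DB-API cursor connected to the data warehouse."""
    cursor.execute(ACTIVE_REQUESTS_SQL)
    return cursor.fetchall()
```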

NEW QUESTION 13

You have sensor devices that report data to Microsoft Azure Stream Analytics. Each sensor reports data several times per second.

You need to create a live dashboard in Microsoft Power BI that shows the performance of the sensor devices. The solution must minimize lag when visualizing the data.

Which function should you use for the time-series data element?

Answer: D

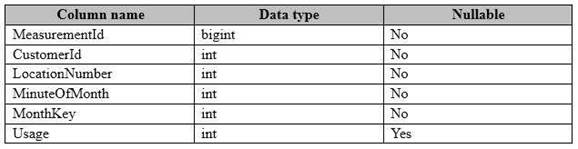

NEW QUESTION 14

You have a fact table named PowerUsage that has 10 billion rows. PowerUsage contains data about customer power usage during the last 12 months. The usage data is collected every minute. PowerUsage contains the columns configured as shown in the following table.

LocationNumber has a default value of 1. The MinuteOfMonth column contains the relative minute within each month. The value resets at the beginning of each month.

A sample of the fact table data is shown in the following table.

There is a related table named Customer that joins to the PowerUsage table on the CustomerId column. Sixty percent of the rows in PowerUsage are associated to less than 10 percent of the rows in Customer. Most queries do not require the use of the Customer table. Many queries select on a specific month.

You need to minimize how long it takes to find the records for a specific month. What should you do?

Answer: C

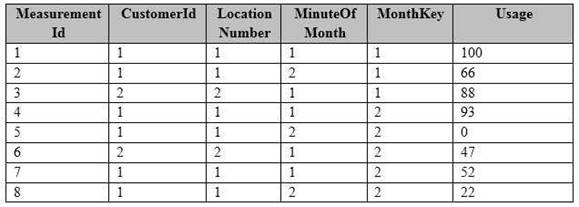

NEW QUESTION 15

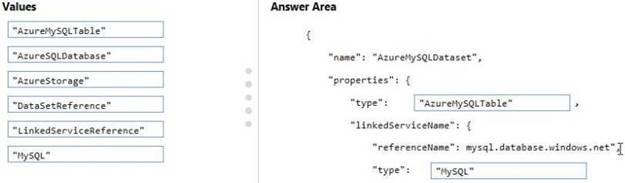

DRAG DROP

You need to create a linked service in Microsoft Azure Data Factory. The linked service must use an Azure Database for MySQL table named Customers.

How should you complete the JSON snippet? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

NEW QUESTION 16

You have a Microsoft Azure Data Lake Analytics service.

You need to store a list of multiple-character string values in a single column and to use a cross apply explode expression to output the values.

Which type of data should you use in a U-SQL query?

Answer: B
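
To make the cross apply explode behavior concrete, here is a Python analogy rather than U-SQL. Each input row holds a key and an array-like column of strings (in U-SQL this would typically be a `SQL.ARRAY<string>` column, which is an assumption since the answer options are not shown), and exploding yields one output row per element. The function name and sample data are hypothetical.

```python
from typing import Iterable, List, Tuple


def cross_apply_explode(rows: Iterable[Tuple[str, List[str]]]) -> List[Tuple[str, str]]:
    """Emit one (key, value) row for every element of each row's list column."""
    return [(key, value) for key, values in rows for value in values]


rows = [
    ("u1", ["red", "green"]),
    ("u2", ["blue"]),
    ("u3", []),  # an empty list produces no output rows, as with CROSS APPLY
]

for row in cross_apply_explode(rows):
    print(row)
```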

NEW QUESTION 17

Note: This question is part of a series of questions that present the same scenario. For your convenience, the scenario is repeated in each question. Each question presents a different goal and answer choices, but the text of the scenario is exactly the same in each question in this series.

Start of repeated scenario

You are migrating an existing on-premises data warehouse named LocalDW to Microsoft Azure. You will use an Azure SQL data warehouse named AzureDW for data storage and an Azure Data Factory named AzureDF for extract, transformation, and load (ETL) functions.

For each table in LocalDW, you create a table in AzureDW.

On the on-premises network, you have a Data Management Gateway.

Some source data is stored in Azure Blob storage. Some source data is stored on an on-premises Microsoft SQL Server instance. The instance has a table named Table1.

After data is processed by using AzureDF, the data must be archived and accessible forever. The archived data must meet a Service Level Agreement (SLA) for availability of 99 percent. If an Azure region fails, the archived data must be available for reading always.

End of repeated scenario.

You need to configure Azure Data Factory to connect to the on-premises SQL Server instance. What should you do first?

Answer: C

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/v1/data-factory-move-data-between-onprem-and-cloud

NEW QUESTION 18

Note: This question is part of a series of questions that present the same scenario. For your convenience, the scenario is repeated in each question. Each question presents a different goal and answer choices, but the text of the scenario is exactly the same in each question in this series.

Start of repeated scenario

You are migrating an existing on-premises data warehouse named LocalDW to Microsoft Azure. You will use an Azure SQL data warehouse named AzureDW for data storage and an Azure Data Factory named AzureDF for extract, transformation, and load (ETL) functions.

For each table in LocalDW, you create a table in AzureDW.

On the on-premises network, you have a Data Management Gateway.

Some source data is stored in Azure Blob storage. Some source data is stored on an on-premises Microsoft SQL Server instance. The instance has a table named Table1.

After data is processed by using AzureDF, the data must be archived and accessible forever. The archived data must meet a Service Level Agreement (SLA) for availability of 99 percent. If an Azure region fails, the archived data must be available for reading always. The storage solution for the archived data must minimize costs.

End of repeated scenario.

You need to define the schema of Table1 in AzureDF. What should you create?

Answer: C