70-776 practice questions for the Microsoft exam Perform Big Data Engineering on Microsoft Cloud Services (beta).


NEW QUESTION 1

You have a Microsoft Azure Data Factory that recently ran several activities in parallel. You receive alerts indicating that there are insufficient resources.

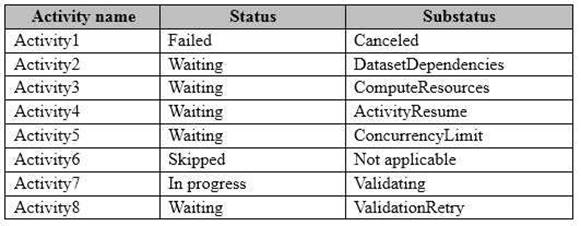

From the Activity Windows list in the Monitoring and Management app, you discover the statuses described in the following table.

Which activity cannot complete because of insufficient resources?

Answer: C

NEW QUESTION 2

You have a Microsoft Azure Data Lake Store that contains a folder named /Users/User1 and an Azure Active Directory account named User1.

You need to provide access to the Data Lake Store to meet the following requirements:

• Grant User1 read and list access to /Users/User1.

• Prevent User1 from viewing the contents in /Users.

• Minimize the number of permissions granted to User1.

What should you do?
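Data Lake Store uses a POSIX-style ACL model: listing a folder requires Read and Execute on that folder plus Execute on every ancestor folder, and Execute alone lets a user traverse a folder without listing its contents. The sketch below models only that rule with a hypothetical in-memory ACL table. It calls no Azure API, and the `acl` dictionary and the `ancestors` and `can_list` helpers are illustrative assumptions.

```python
from typing import Dict, List, Set

# Hypothetical ACL table: path -> permission letters granted to User1.
# "r" = read, "x" = execute (traverse). Write is not needed in this scenario.
Acl = Dict[str, Set[str]]


def ancestors(path: str) -> List[str]:
    """Return the ancestor folders of `path`, root first (e.g. "/", "/Users")."""
    parts = [p for p in path.split("/") if p]
    result = ["/"]
    for i in range(1, len(parts)):
        result.append("/" + "/".join(parts[:i]))
    return result


def can_list(acl: Acl, folder: str) -> bool:
    """Listing a folder needs r+x on the folder and x on every ancestor."""
    if not {"r", "x"} <= acl.get(folder, set()):
        return False
    return all("x" in acl.get(a, set()) for a in ancestors(folder))


# Traverse-only on / and /Users, read + execute on /Users/User1.
acl: Acl = {
    "/": {"x"},
    "/Users": {"x"},
    "/Users/User1": {"r", "x"},
}

print(can_list(acl, "/Users/User1"))  # True
print(can_list(acl, "/Users"))        # False: no read permission on /Users
```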

Answer: D

NEW QUESTION 3

DRAG DROP

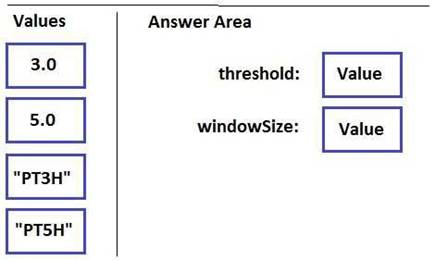

You plan to create an alert for a Microsoft Azure Data Factory pipeline.

You need to configure the alert to trigger when the total number of failed runs exceeds five within a three-hour period.

How should you configure the window size and the threshold in the JSON file? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.
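The alert condition (more than five failed runs within a three-hour period) is a sliding-window count. The sketch below evaluates that rule over a list of failure timestamps. It illustrates only the threshold logic, not the Data Factory alert JSON schema, and `should_alert` and its parameters are hypothetical names.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Iterable


def should_alert(failures: Iterable[datetime],
                 window: timedelta = timedelta(hours=3),
                 threshold: int = 5) -> bool:
    """Return True if more than `threshold` failures fall inside one window.

    The window is half-open, (t - window, t], anchored at each failure time t.
    """
    recent = deque()
    for t in sorted(failures):
        recent.append(t)
        # Drop failures that are at least `window` older than the newest one.
        while recent and t - recent[0] >= window:
            recent.popleft()
        if len(recent) > threshold:  # "exceeds five" means strictly greater
            return True
    return False


base = datetime(2018, 1, 1, 0, 0)
six_in_two_hours = [base + timedelta(minutes=20 * i) for i in range(6)]
six_spread_out = [base + timedelta(hours=i) for i in range(6)]

print(should_alert(six_in_two_hours))  # True
print(should_alert(six_spread_out))    # False
```

Five failures never trigger the rule, because the comparison is strictly greater than the threshold.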

Answer:

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/v1/data-factory-monitor-manage-pipelines?view=powerbiapi-1.1.10

NEW QUESTION 4

You are implementing a solution by using Microsoft Azure Data Lake Analytics. You have a dataset that contains data related to website visits.

You need to combine overlapping visits into a single entry based on the timestamp of the visits.

Which type of U-SQL interface should you use?
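Whatever U-SQL interface is chosen, the core logic is an interval merge: sort one user's visits by start time and fold each visit into the previous entry when they overlap. The Python below sketches only that merge step. The `(start, end)` tuple layout and the `merge_visits` name are assumptions, not part of the exam scenario.

```python
from typing import List, Tuple

Visit = Tuple[int, int]  # (start, end) timestamps, e.g. epoch seconds


def merge_visits(visits: List[Visit]) -> List[Visit]:
    """Combine overlapping or touching visits of one user into single entries."""
    merged: List[Visit] = []
    for start, end in sorted(visits):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous entry: extend its end if needed.
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))
        else:
            merged.append((start, end))
    return merged


print(merge_visits([(10, 20), (0, 5), (15, 30), (40, 50)]))
# [(0, 5), (10, 30), (40, 50)]
```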

Answer: C

NEW QUESTION 5

DRAG DROP

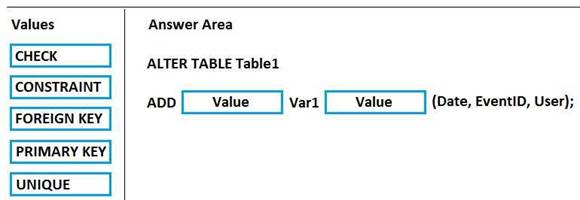

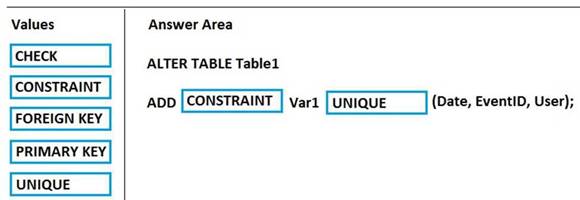

You use Microsoft Azure Stream Analytics to analyze data from an Azure event hub in real time and send the output to a table named Table1 in an Azure SQL database. Table1 has three columns named Date, EventID, and User.

You need to prevent duplicate data from being stored in the database.

How should you complete the statement? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.
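Preventing duplicates amounts to keeping one row per key. The sketch below assumes, hypothetically, that a duplicate means a repeated (Date, EventID, User) combination and keeps the first occurrence. In the actual solution the deduplication is expressed in the Stream Analytics statement, not in Python; `dedupe` is an illustrative name.

```python
from typing import Dict, Iterable, List, Tuple

Row = Dict[str, str]


def dedupe(rows: Iterable[Row],
           key_columns: Tuple[str, ...] = ("Date", "EventID", "User")) -> List[Row]:
    """Keep the first row seen for each combination of key_columns."""
    seen = set()
    unique: List[Row] = []
    for row in rows:
        key = tuple(row[c] for c in key_columns)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


events = [
    {"Date": "2018-01-01", "EventID": "1", "User": "a"},
    {"Date": "2018-01-01", "EventID": "1", "User": "a"},  # duplicate
    {"Date": "2018-01-01", "EventID": "2", "User": "a"},
]
print(len(dedupe(events)))  # 2
```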

Answer:

Explanation:

NEW QUESTION 6

You have a Microsoft Azure SQL data warehouse. You have an Azure Data Lake Store that contains data from ORC, RC, Parquet, and delimited text files.

You need to load the data to the data warehouse in the least amount of time possible.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: DF

Explanation:

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lake-store

NEW QUESTION 7

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a table named Table1 that contains 3 billion rows. Table1 contains data from the last 36 months.

At the end of every month, the oldest month of data is removed based on a column named DateTime.

You need to minimize how long it takes to remove the oldest month of data.

Solution: You specify DateTime as the hash distribution column.

Does this meet the goal?
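The solutions in this series differ in how the 36 months of rows are laid out. The sketch below is a simplified in-memory model, with hypothetical names and sample data, of why storing rows grouped by month makes removing the oldest month cheap: a month-grouped layout drops one bucket, whereas a flat layout must examine every row. It does not model hash or round-robin distribution in SQL Data Warehouse.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, int]  # (month as "YYYY-MM", value)


def remove_oldest_flat(rows: List[Row]) -> Tuple[List[Row], int]:
    """Flat layout: every row is examined to filter out the oldest month."""
    oldest = min(month for month, _ in rows)
    kept = [r for r in rows if r[0] != oldest]
    return kept, len(rows)  # rows examined by the filter pass


def remove_oldest_partitioned(parts: Dict[str, List[Row]]) -> int:
    """Month-grouped layout: drop the oldest bucket as a single operation."""
    oldest = min(parts)
    del parts[oldest]
    return 0  # no individual rows are examined


# 36 months of sample data, 100 rows per month.
months = [f"{2015 + m // 12}-{m % 12 + 1:02d}" for m in range(36)]
rows = [(month, i) for month in months for i in range(100)]

parts: Dict[str, List[Row]] = defaultdict(list)
for month, value in rows:
    parts[month].append((month, value))

kept, examined = remove_oldest_flat(rows)
print(len(kept), examined)                           # 3500 3600
print(remove_oldest_partitioned(parts), len(parts))  # 0 35
```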

Answer: B

NEW QUESTION 8

You have an on-premises Microsoft SQL Server instance.

You plan to copy a table from the instance to a Microsoft Azure Storage account.

You need to ensure that you can copy the table by using Azure Data Factory.

Which service should you deploy?

Answer: C

NEW QUESTION 9

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a table named Table1 that contains 3 billion rows. Table1 contains data from the last 36 months.

At the end of every month, the oldest month of data is removed based on a column named DateTime.

You need to minimize how long it takes to remove the oldest month of data.

Solution: You implement a columnstore index on the DateTime column.

Does this meet the goal?

Answer: A

NEW QUESTION 10

You are developing an application that uses Microsoft Azure Stream Analytics.

You have data structures that are defined dynamically.

You want to enable consistency between the logical methods used by stream processing and batch processing.

You need to ensure that the data can be integrated by using consistent data points. What should you use to process the data?

Answer: D

NEW QUESTION 11

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a table named Table1 that contains 3 billion rows. Table1 contains data from the last 36 months.

At the end of every month, the oldest month of data is removed based on a column named DateTime.

You need to minimize how long it takes to remove the oldest month of data.

Solution: You implement round robin for table distribution.

Does this meet the goal?

Answer: B

NEW QUESTION 12

You ingest data into a Microsoft Azure event hub.

You need to export the data from the event hub to Azure Storage and to prepare the data for batch processing tasks in Azure Data Lake Analytics.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: BD

NEW QUESTION 13

You have a Microsoft Azure SQL data warehouse that has 10 compute nodes.

You need to export 10 TB of data from a data warehouse table to several new flat files in Azure Blob storage. The solution must maximize the use of the available compute nodes.

What should you do?
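For intuition about what "maximize the use of the available compute nodes" means: every SQL data warehouse spreads each table across 60 distributions. Assuming the table is evenly spread and the export writes from every node in parallel, each of the 10 nodes owns six distributions and writes roughly 1 TB:

```latex
\frac{60\ \text{distributions}}{10\ \text{nodes}} = 6\ \text{distributions per node},
\qquad
\frac{10\ \text{TB}}{10\ \text{nodes}} = 1\ \text{TB per node}
```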

Answer: D

NEW QUESTION 14

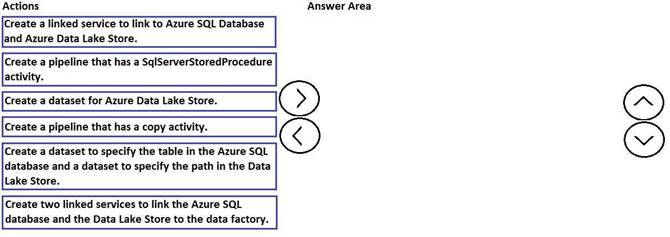

DRAG DROP

You need to copy data from Microsoft Azure SQL Database to Azure Data Lake Store by using Azure Data Factory.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.
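In Data Factory, a dataset refers to a linked service and a pipeline's activities refer to datasets, so each object has to be created after the objects it depends on. The sketch below derives a valid creation order from a hypothetical dependency map using the standard-library `graphlib` module (Python 3.9+). The object names are illustrative and are not Data Factory identifiers.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each object -> the objects it refers to.
depends_on = {
    "SqlDbLinkedService": set(),
    "DataLakeLinkedService": set(),
    "SqlDbDataset": {"SqlDbLinkedService"},
    "DataLakeDataset": {"DataLakeLinkedService"},
    "CopyPipeline": {"SqlDbDataset", "DataLakeDataset"},
}

# static_order() yields every object after all of its dependencies.
order = list(TopologicalSorter(depends_on).static_order())
print(order)
```

The relative order of independent objects (for example, the two linked services) is not significant; only the dependency edges constrain the sequence.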

Answer:

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/copy-activity-overview

NEW QUESTION 15

Note: This question is part of a series of questions that use the same scenario. For your convenience, the scenario is repeated in each question. Each question presents a different goal and answer choices, but the text of the scenario is exactly the same in each question in this series.

Start of repeated scenario

You are migrating an existing on-premises data warehouse named LocalDW to Microsoft Azure. You will use an Azure SQL data warehouse named AzureDW for data storage and an Azure Data Factory named AzureDF for extract, transform, and load (ETL) functions.

For each table in LocalDW, you create a table in AzureDW.

Answer: A

NEW QUESTION 16

You are using Cognitive capabilities in U-SQL to analyze images that contain different types of objects.

You need to identify which objects might be people.

Which two reference assemblies should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: BC

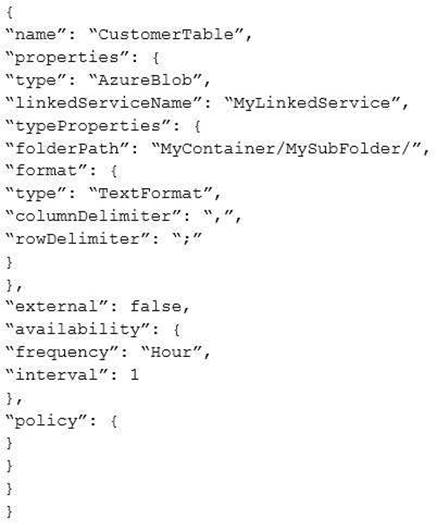

NEW QUESTION 17

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are troubleshooting a slice in Microsoft Azure Data Factory for a dataset that has been in a waiting state for the last three days. The dataset should have been ready two days ago.

The dataset is being produced outside the scope of Azure Data Factory. The dataset is defined by using the following JSON code.

You need to modify the JSON code to ensure that the dataset is marked as ready whenever there is data in the data store.

Solution: You change the interval to 24.

Does this meet the goal?
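In Data Factory v1, a dataset's availability section cuts the timeline into slices whose length is `interval` units of `frequency`, so changing `interval` only moves slice boundaries. For data produced outside the factory, readiness is governed by the dataset's `external` property and external data policy rather than by slice size. The sketch below, with hypothetical names, computes complete slice windows to show that, for example, an hourly frequency with an interval of 24 produces the same slices as a daily frequency with an interval of 1.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

# Hypothetical mapping of frequency names to durations.
UNITS = {
    "Minute": timedelta(minutes=1),
    "Hour": timedelta(hours=1),
    "Day": timedelta(days=1),
}


def slices(start: datetime, end: datetime,
           frequency: str, interval: int) -> List[Tuple[datetime, datetime]]:
    """Return the complete [slice_start, slice_end) windows between start and end."""
    step = UNITS[frequency] * interval
    windows = []
    t = start
    while t + step <= end:
        windows.append((t, t + step))
        t += step
    return windows


start = datetime(2018, 1, 1)
end = datetime(2018, 1, 4)
print(len(slices(start, end, "Hour", 24)))  # 3
print(len(slices(start, end, "Day", 1)))    # 3
```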

Answer: B

Explanation:

References:

https://docs.microsoft.com/en-us/azure/data-factory/v1/data-factory-create-datasets

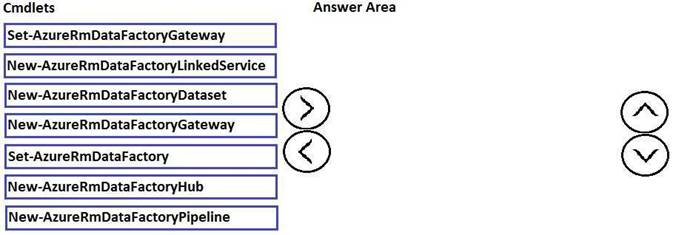

NEW QUESTION 18

DRAG DROP

You have an Apache Hive database in a Microsoft Azure HDInsight cluster. You create an Azure Data Factory named DF1.

You need to transform the data in the Hive database and to output the data to Azure Blob storage.

Which three cmdlets should you run in sequence? To answer, move the appropriate cmdlets from the list of cmdlets to the answer area and arrange them in the correct order.

Answer:

Explanation:

References:

https://docs.microsoft.com/en-us/powershell/module/azurerm.datafactories/new-azurermdatafactorypipeline?view=azurermps-4.4.0

https://github.com/aelij/azure-content/blob/master/articles/data-factory/data-factory-build-your-first-pipeline-using-powershell.md
