Cloudera CCA-505 Free Practice Questions

NEW QUESTION 1

Identify two features/issues that YARN is designed to address:

- A. Standardize on a single MapReduce API

- B. Single point of failure in the NameNode

- C. Reduce complexity of the MapReduce APIs

- D. Resource pressures on the JobTracker

- E. Ability to run frameworks other than MapReduce, such as MPI

- F. HDFS latency

Answer: DE

NEW QUESTION 2

You have a Hadoop cluster running HDFS, and a gateway machine external to the cluster from which clients submit jobs. What do you need to do in order to run Impala on the cluster and submit jobs from the command line of the gateway machine?

- A. Install the impalad daemon, statestored daemon, and catalogd daemon on each machine in the cluster and on the gateway node

- B. Install the impalad daemon on each machine in the cluster, the statestored daemon and catalogd daemon on one machine in the cluster, and the impala shell on your gateway machine

- C. Install the impalad daemon and the impala shell on your gateway machine, and the statestored daemon and catalog daemon on one of the nodes in the cluster

- D. Install the impalad daemon, the statestored daemon, the catalogd daemon, and the impala shell on your gateway machine

- E. Install the impalad daemon, statestored daemon, and catalogd daemon on each machine in the cluster, and the impala shell on your gateway machine

Answer: B

NEW QUESTION 3

Which two actions must you take if you are running a Hadoop cluster with a single NameNode and six DataNodes, and you want to change a configuration parameter so that it affects all six DataNodes?

- A. You must modify the configuration file on each of the six DataNode machines.

- B. You must restart the NameNode daemon to apply the changes to the cluster

- C. You must restart all six DataNode daemons to apply the changes to the cluster

- D. You don’t need to restart any daemon, as they will pick up changes automatically

- E. You must modify the configuration files on the NameNode only. DataNodes read their configuration from the master nodes.

Answer: AC

NEW QUESTION 4

Which YARN process runs as “container 0” of a submitted job and is responsible for resource requests?

- A. ResourceManager

- B. NodeManager

- C. JobHistoryServer

- D. ApplicationMaster

- E. JobTracker

- F. ApplicationManager

Answer: D

NEW QUESTION 5

You observe that the number of spilled records from Map tasks far exceeds the number of map output records. Your child heap size is 1GB and your io.sort.mb value is set to 100 MB. How would you tune your io.sort.mb value to achieve maximum memory to disk I/O ratio?

- A. Decrease the io.sort.mb value to 0

- B. Increase the io.sort.mb to 1GB

- C. For 1GB child heap size an io.sort.mb of 128 MB will always maximize memory to disk I/O

- D. Tune the io.sort.mb value until you observe that the number of spilled records equals (or is as close to equals) the number of map output records

Answer: D
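The tuning goal in this question can be checked from two job counters, "Spilled Records" and "Map output records". The following is a minimal sketch, assuming you have already read those counter values from a finished job; the function names and the 5% tolerance are illustrative choices, not Hadoop settings.

```python
def spill_ratio(spilled_records, map_output_records):
    """Return spilled records per map output record.

    A ratio near 1.0 means each record was written to disk about once.
    A much larger ratio means records were spilled and merged repeatedly.
    """
    if map_output_records <= 0:
        raise ValueError("map_output_records must be positive")
    return spilled_records / map_output_records


def needs_larger_sort_buffer(spilled_records, map_output_records, tolerance=0.05):
    """Heuristic: suggest raising io.sort.mb while the ratio is above 1 + tolerance."""
    return spill_ratio(spilled_records, map_output_records) > 1.0 + tolerance
```

With 300 spilled records against 100 map output records the ratio is 3.0, which suggests the sort buffer is too small for the map output.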

NEW QUESTION 6

You use the hadoop fs -put command to add a file “sales.txt” to HDFS. This file is small enough that it fits into a single block, which is replicated to three nodes in your cluster (with a replication factor of 3). One of the nodes holding this file (a single block) fails. How will the cluster handle the replication of this file in this situation?

- A. The cluster will re-replicate the file the next time the system administrator reboots the NameNode daemon (as long as the file’s replication doesn’t fall below two)

- B. This file will be immediately re-replicated and all other HDFS operations on the cluster will halt until the cluster’s replication values are restored

- C. The file will remain under-replicated until the administrator brings that node back online

- D. The file will be re-replicated automatically after the NameNode determines it is under replicated based on the block reports it receives from the DataNodes

Answer: D
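HDFS restores missing replicas on its own once the NameNode learns that a block has fewer live replicas than its replication factor. The sketch below is a simplified model of that bookkeeping, not NameNode code; the function name, the dictionary layout, and the node names are all hypothetical.

```python
def under_replicated(block_locations, live_nodes, replication=3):
    """Return {block_id: missing_replica_count} for blocks below the target.

    block_locations maps a block id to the set of DataNodes reported to hold it.
    live_nodes is the set of DataNodes currently considered alive.
    """
    missing = {}
    for block_id, nodes in block_locations.items():
        live_replicas = len(nodes & live_nodes)
        if live_replicas < replication:
            missing[block_id] = replication - live_replicas
    return missing


# One block of sales.txt stored on three nodes; dn3 has failed.
blocks = {"blk_sales_0": {"dn1", "dn2", "dn3"}}
print(under_replicated(blocks, live_nodes={"dn1", "dn2", "dn4"}))
```

In this model the block needs one more replica, which could be scheduled on any live node that does not already hold it, while other HDFS operations continue.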

NEW QUESTION 7

Assuming a cluster running HDFS, MapReduce version 2 (MRv2) on YARN with all settings at their default, what do you need to do when adding a new slave node to a cluster?

- A. Nothing, other than ensuring that DNS (or /etc/hosts files on all machines) contains an entry for the new node.

- B. Restart the NameNode and ResourceManager daemons and resubmit any running jobs

- C. Increase the value of dfs.number.of.nodes in hdfs-site.xml

- D. Add a new entry to /etc/nodes on the NameNode host.

- E. Restart the NameNode daemon.

Answer: A

NEW QUESTION 8

You want a node to only swap Hadoop daemon data from RAM to disk when absolutely necessary. What should you do?

- A. Delete the /swapfile file on the node

- B. Set vm.swappiness to 0 in /etc/sysctl.conf

- C. Set the ram.swap parameter to 0 in core-site.xml

- D. Delete the /etc/swap file on the node

- E. Delete the /dev/vmswap file on the node

Answer: B
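To confirm what a node's sysctl configuration actually sets, you could parse the file contents. This is a minimal sketch, assuming the usual `key = value` layout of /etc/sysctl.conf with `#` or `;` comments; the helper name is hypothetical, and the sample text is made up for illustration.

```python
def read_sysctl_setting(conf_text, key):
    """Return the last value assigned to key in sysctl.conf-style text, or None."""
    value = None
    for raw_line in conf_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith(("#", ";")):
            continue  # skip blank lines and comments
        name, sep, val = line.partition("=")
        if sep and name.strip() == key:
            value = val.strip()  # later assignments override earlier ones
    return value


sample = "# kernel tuning\nvm.swappiness = 60\nvm.swappiness=0\n"
print(read_sysctl_setting(sample, "vm.swappiness"))
```

The function only reads text passed to it, so it can be tried on a copy of the file without touching the live system.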

NEW QUESTION 9

On a cluster running MapReduce v2 (MRv2) on YARN, a MapReduce job is given a directory of 10 plain text files as its input directory. Each file is made up of 3 HDFS blocks. How many Mappers will run?

- A. We cannot say; the number of Mappers is determined by the ResourceManager

- B. We cannot say; the number of Mappers is determined by the ApplicationManager

- C. We cannot say; the number of Mappers is determined by the developer

- D. 30

- E. 3

- F. 10

Answer: D
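For splittable plain text input, one map task runs per input split, and by default the split size equals the HDFS block size. The sketch below models that arithmetic, assuming a 128 MB block size and files that fill all three of their blocks; it also mirrors the 10% slop that lets the final split run slightly over the split size. The function name and sizes are illustrative.

```python
SPLIT_SLOP = 1.1  # the last split may be up to 10% larger than the split size


def count_splits(file_size, split_size):
    """Count input splits for one splittable file (empty files are not modeled)."""
    remaining = file_size
    splits = 0
    while remaining / split_size > SPLIT_SLOP:
        splits += 1
        remaining -= split_size
    if remaining > 0:
        splits += 1  # the tail becomes the final split
    return splits


BLOCK = 128 * 1024 * 1024  # assumed block size in bytes
file_sizes = [3 * BLOCK] * 10  # 10 files, each 3 full blocks
total_mappers = sum(count_splits(size, BLOCK) for size in file_sizes)
print(total_mappers)
```

Under these assumptions each file yields 3 splits, so the job runs 10 x 3 = 30 map tasks.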

NEW QUESTION 10

Which three basic configuration parameters must you set to migrate your cluster from MapReduce1 (MRv1) to MapReduce v2 (MRv2)?

- A. Configure the NodeManager hostname and enable services on YARN by setting the following property in yarn-site.xml:<name>yarn.nodemanager.hostname</name><value>your_nodeManager_hostname</value>

- B. Configure the number of map tasks per job on YARN by setting the following property in mapred-site.xml:<name>mapreduce.job.maps</name><value>2</value>

- C. Configure MapReduce as a framework running on YARN by setting the following property in mapred-site.xml:<name>mapreduce.framework.name</name><value>yarn</value>

- D. Configure the ResourceManager hostname and enable node services on YARN by setting the following property in yarn-site.xml:<name>yarn.resourcemanager.hostname</name><value>your_resourceManager_hostname</value>

- E. Configure a default scheduler to run on YARN by setting the following property in mapred-site.xml:<name>mapreduce.jobtracker.taskScheduler</name><value>org.apache.hadoop.mapred.JobQueueTaskScheduler</value>

- F. Configure the NodeManager to enable MapReduce services on YARN by adding following property in yarn-site.xml:<name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value>

Answer: CDF
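In the actual files, each name/value pair sits inside a `<property>` element under a `<configuration>` root. A minimal MRv2 setup typically needs `mapreduce.framework.name`, `yarn.resourcemanager.hostname`, and `yarn.nodemanager.aux-services`. The sketch below renders those three with Python's standard library; the helper name and the hostname `rm.example.com` are placeholders, not values from the question.

```python
import xml.etree.ElementTree as ET


def build_site_xml(properties):
    """Render a dict of Hadoop properties as a <configuration> XML string."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# mapred-site.xml: run MapReduce as a YARN application.
mapred_site = build_site_xml({"mapreduce.framework.name": "yarn"})

# yarn-site.xml: where the ResourceManager lives, plus the shuffle service.
yarn_site = build_site_xml({
    "yarn.resourcemanager.hostname": "rm.example.com",  # placeholder hostname
    "yarn.nodemanager.aux-services": "mapreduce_shuffle",
})

print(mapred_site)
print(yarn_site)
```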

NEW QUESTION 11

Your cluster is running MapReduce version 2 (MRv2) on YARN. Your ResourceManager is configured to use the FairScheduler. Now you want to configure your scheduler such that a new user on the cluster can submit jobs into their own queue at application submission. Which configuration should you set?

- A. You can specify new queue name when user submits a job and new queue can be created dynamically if yarn.scheduler.fair.user-as-default-queue = false

- B. yarn.scheduler.fair.user-as-default-queue = false and yarn.scheduler.fair.allow-undeclared-pools = true

- C. You can specify new queue name per application in allocation.fair.allow-undeclared-pools = true automatically assigned to the application queue

- D. You can specify new queue name when user submits a job and new queue can be created dynamically if the property yarn.scheduler.fair.allow-undeclared-pools = true

Answer: A
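The interaction between `yarn.scheduler.fair.user-as-default-queue` and `yarn.scheduler.fair.allow-undeclared-pools` can be easier to follow as code. The sketch below is a simplified model of the documented behavior, assuming both properties default to true; it ignores queue placement rules and the `root.` prefix, and the function and parameter names are hypothetical.

```python
def resolve_queue(user, requested_queue=None, declared_queues=("default",),
                  user_as_default_queue=True, allow_undeclared_pools=True):
    """Pick the queue an application would land in under this simplified model."""
    if requested_queue:
        target = requested_queue      # the submitter named a queue explicitly
    elif user_as_default_queue:
        target = user                 # fall back to a queue named after the user
    else:
        target = "default"            # shared default queue
    # Undeclared queues are only created on the fly when the scheduler allows it.
    if target in declared_queues or allow_undeclared_pools:
        return target
    return "default"


print(resolve_queue("alice"))
print(resolve_queue("alice", requested_queue="adhoc", user_as_default_queue=False))
print(resolve_queue("alice", allow_undeclared_pools=False))
```

In this model a new user gets a queue of their own only while undeclared pools are allowed; otherwise the application falls back to the shared default queue.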

NEW QUESTION 12

You have converted your Hadoop cluster from a MapReduce 1 (MRv1) architecture to a MapReduce 2 (MRv2) on YARN architecture. Your developers are accustomed to specifying the number of map and reduce tasks (resource allocation) when they run jobs. A developer wants to know how to specify the number of reduce tasks when a specific job runs. Which method should you tell that developer to implement?

- A. Developers specify reduce tasks in the exact same way for both MapReduce version 1 (MRv1) and MapReduce version 2 (MRv2) on YARN. Thus, executing -D mapreduce.job.reduces=2 will specify 2 reduce tasks.

- B. In YARN, the ApplicationMaster is responsible for requesting the resources required for a specific job. Thus, executing -D yarn.applicationmaster.reduce.tasks=2 will specify that the ApplicationMaster launch two task containers on the worker nodes.

- C. In YARN, resource allocation is a function of megabytes of memory in multiples of 1024 MB. Thus, they should specify the amount of memory resource they need by executing -D mapreduce.reduce.memory.mb=2048

- D. In YARN, resource allocation is a function of virtual cores specified by the ApplicationMaster making requests to the NodeManager where a reduce task is handled by a single container (and thus a single virtual core). Thus, the developer needs to specify the number of virtual cores to the NodeManager by executing -D yarn.nodemanager.cpu-vcores=2

- E. MapReduce version 2 (MRv2) on YARN abstracts resource allocation away from the idea of “tasks” into memory and virtual cores, thus eliminating the need for a developer to specify the number of reduce tasks, and indeed preventing the developer from specifying the number of reduce tasks.

Answer: A
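The number of reduce tasks is controlled by the `mapreduce.job.reduces` property, which can be passed as a generic `-D` option when the job driver parses generic options (for example through ToolRunner). The sketch below only assembles the argument list; the jar name, class name, and paths are hypothetical.

```python
def hadoop_jar_command(jar, main_class, job_args, reduces):
    """Build an argv list for 'hadoop jar' with an explicit reducer count."""
    if reduces < 0:
        raise ValueError("reduces must be zero or positive")
    # Generic options such as -D go before the job's own arguments.
    return ["hadoop", "jar", jar, main_class,
            "-D", f"mapreduce.job.reduces={reduces}", *job_args]


cmd = hadoop_jar_command("wordcount.jar", "com.example.WordCount",
                         ["/input", "/output"], reduces=2)
print(" ".join(cmd))
```

The list could be handed to `subprocess.run` on a machine with a Hadoop client installed; here it is only printed.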

NEW QUESTION 13

Which YARN daemon or service negotiates map and reduce Containers from the Scheduler, tracking their status and monitoring for progress?

- A. ResourceManager

- B. ApplicationMaster

- C. NodeManager

- D. ApplicationManager

Answer: B

Explanation:

Reference: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.1-latest/bk_using-apache-hadoop/content/yarn_overview.html

NEW QUESTION 14

You have installed a cluster running HDFS and MapReduce version 2 (MRv2) on YARN. You have no dfs.hosts entry(ies) in your hdfs-site.xml configuration file. You configure a new worker node by setting fs.default.name in its configuration files to point to the NameNode on your cluster, and you start the DataNode daemon on that worker node.

What do you have to do on the cluster to allow the worker node to join, and start storing HDFS blocks?

- A. Nothing; the worker node will automatically join the cluster when the DataNode daemon is started.

- B. Without creating a dfs.hosts file or making any entries, run the command hadoop dfsadmin -refreshHadoop on the NameNode

- C. Create a dfs.hosts file on the NameNode, add the worker node’s name to it, then issue the command hadoop dfsadmin -refreshNodes on the NameNode

- D. Restart the NameNode

Answer: A
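Whether a DataNode may register depends on the include list named by `dfs.hosts` and the exclude list named by `dfs.hosts.exclude`. When no include list is configured, any DataNode that is not excluded can join. The sketch below is a simplified model of that rule; the function name and hostnames are hypothetical.

```python
def may_register(hostname, include_hosts=frozenset(), exclude_hosts=frozenset()):
    """Return True if a DataNode on hostname would be admitted in this model."""
    if hostname in exclude_hosts:
        return False
    # An empty include list means every non-excluded host is allowed.
    return not include_hosts or hostname in include_hosts


print(may_register("worker7"))                               # no dfs.hosts entries
print(may_register("worker7", include_hosts={"worker1"}))    # not on the include list
```

Only when an include list is in use does a new host have to be added to it and the lists reloaded with `hadoop dfsadmin -refreshNodes`.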

NEW QUESTION 15

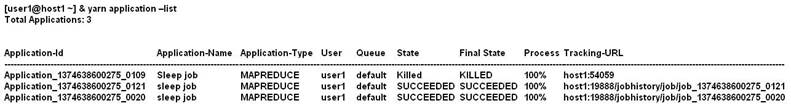

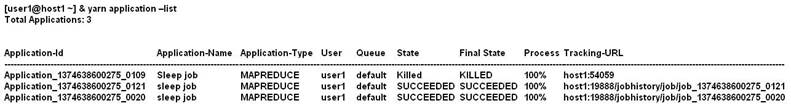

Given:

You want to clean up this list by removing jobs where the state is KILLED. What command you enter?

- A. yarn application -kill application_1374638600275_0109

- B. yarn rmadmin -refreshQueue

- C. yarn application -refreshJobHistory

- D. yarn rmadmin -kill application_1374638600275_0109

Answer: A

Explanation:

Reference: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.1-latest/bk_using-apache-hadoop/content/common_mrv2_commands.html

NEW QUESTION 16

Which YARN daemon or service monitors a Container’s per-application resource usage (e.g., memory, CPU)?

- A. NodeManager

- B. ApplicationMaster

- C. ApplicationManagerService

- D. ResourceManager

Answer: A

Explanation:

Reference: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.0.0.2/bk_using-apache-hadoop/content/ch_using-apache-hadoop-4.html (4th para)
