It is impossible to pass the Microsoft DP-200 exam in a short time without any help. Come to Examcollection soon and find the most advanced, correct, and guaranteed Microsoft DP-200 practice questions. You will get a surprising result with our Implementing an Azure Data Solution practice guides.

Check DP-200 free dumps before getting the full version:

NEW QUESTION 1

You develop data engineering solutions for a company. The company has on-premises Microsoft SQL Server databases at multiple locations.

The company must integrate data with Microsoft Power BI and Microsoft Azure Logic Apps. The solution must avoid single points of failure during connection and transfer to the cloud. The solution must also minimize latency.

You need to secure the transfer of data between on-premises databases and Microsoft Azure.

What should you do?

Answer: D

Explanation:

You can create high availability clusters of On-premises data gateway installations, to ensure your organization can access on-premises data resources used in Power BI reports and dashboards. Such clusters allow gateway administrators to group gateways to avoid single points of failure in accessing on-premises data resources. The Power BI service always uses the primary gateway in the cluster, unless it’s not available. In that case, the service switches to the next gateway in the cluster, and so on.
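The failover order described above can be modeled in a few lines. This is a conceptual sketch only: `Gateway`, `is_online`, and `pick_gateway` are hypothetical names, and the real Power BI service performs this selection internally rather than through any API like this.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Gateway:
    # Hypothetical model of one On-premises data gateway installation in a cluster.
    name: str
    is_online: bool


def pick_gateway(cluster: Sequence[Gateway]) -> Optional[Gateway]:
    """Return the first available gateway, treating cluster[0] as the primary.

    Mirrors the documented behavior: use the primary unless it is unavailable,
    then fall back to the next gateway in the cluster, and so on.
    """
    for gateway in cluster:
        if gateway.is_online:
            return gateway
    return None  # every gateway is down, so the on-premises source is unreachable


cluster = [Gateway("primary", False), Gateway("secondary", True), Gateway("tertiary", True)]
print(pick_gateway(cluster).name)  # secondary
```

Because one offline installation no longer blocks access, the cluster removes the single point of failure that the question asks about.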

References:

https://docs.microsoft.com/en-us/power-bi/service-gateway-high-availability-clusters

NEW QUESTION 2

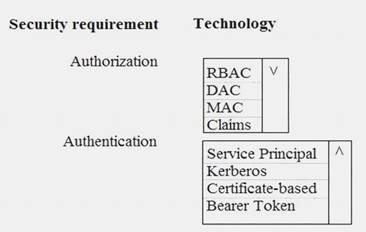

You need to ensure that Azure Data Factory pipelines can be deployed. How should you configure authentication and authorization for deployments? To answer, select the appropriate options in the answer choices.

NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

The way you control access to resources using RBAC is to create role assignments. This is a key concept to understand – it’s how permissions are enforced. A role assignment consists of three elements: security principal, role definition, and scope.
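The three elements of a role assignment, and the fact that an assignment at a parent scope also covers resources beneath it, can be illustrated with a small in-memory model. This is a hypothetical sketch, not the Azure SDK: the action strings and the role-to-action table are simplified assumptions, and deny assignments are ignored. Using a managed identity as the principal is one way to avoid handling credentials or secrets during deployments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleAssignment:
    principal: str        # who: user, group, service principal, or managed identity
    role_definition: str  # what: name of a role definition (a set of allowed actions)
    scope: str            # where: resource path the assignment applies to


# Hypothetical, simplified role definitions; real built-in roles list many more actions.
ROLE_ACTIONS = {
    "Data Factory Contributor": {"factories/read", "factories/write"},
    "Reader": {"factories/read"},
}


def is_authorized(assignments, principal, action, resource):
    """True if some assignment for principal grants action at resource or a parent scope."""
    for a in assignments:
        scope = a.scope.rstrip("/")
        # A child resource inherits assignments made at any enclosing scope.
        in_scope = resource == scope or resource.startswith(scope + "/")
        granted = action in ROLE_ACTIONS.get(a.role_definition, set())
        if a.principal == principal and in_scope and granted:
            return True
    return False


rg = "/subscriptions/sub1/resourceGroups/rg-data"
assignments = [RoleAssignment("adf-deploy-identity", "Data Factory Contributor", rg)]
factory = rg + "/providers/Microsoft.DataFactory/factories/adf1"
print(is_authorized(assignments, "adf-deploy-identity", "factories/write", factory))  # True
```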

Scenario:

• No credentials or secrets should be used during deployments

• Phone-based poll data must only be uploaded by authorized users from authorized devices

• Contractors must not have access to any polling data other than their own

• Access to polling data must be set on a per-Active Directory user basis

References:

https://docs.microsoft.com/en-us/azure/role-based-access-control/overview

NEW QUESTION 3

A company plans to use Azure Storage for file storage purposes. Compliance rules require:

• A single storage account to store all operations, including reads, writes, and deletes

• Retention of an on-premises copy of historical operations

You need to configure the storage account.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: AB

Explanation:

Storage Logging logs request data in a set of blobs in a blob container named $logs in your storage account. This container does not show up if you list all the blob containers in your account but you can see its contents if you access it directly.

To view and analyze your log data, you should download the blobs that contain the log data you are interested in to a local machine. Many storage-browsing tools enable you to download blobs from your storage account; you can also use AzCopy, the command-line copy tool provided by the Azure Storage team, to download your log data.
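A minimal sketch of the download step using the `azure-storage-blob` Python SDK instead of AzCopy, assuming a connection string for the account and a local destination folder (both hypothetical). The `<service>/YYYY/MM/DD/` prefix and the semicolon-delimited entry layout follow the Storage Analytics logging documentation as I understand it; treat the field list as an assumption and check it against the log version you actually receive.

```python
from pathlib import Path

# Assumed names for the first five columns of a version 1.0 Storage Analytics log entry.
LOG_FIELDS = ["version", "request_start_time", "operation_type",
              "request_status", "http_status_code"]


def log_prefix(service: str, year: int, month: int, day: int) -> str:
    """Blob-name prefix for one day of logs in $logs, e.g. 'blob/2011/07/31/'."""
    return f"{service}/{year:04d}/{month:02d}/{day:02d}/"


def parse_log_line(line: str) -> dict:
    """Return the leading fields of a semicolon-delimited log entry.

    Later fields (such as the quoted request URL) need quote-aware parsing,
    so this sketch deliberately stops after the first five.
    """
    return dict(zip(LOG_FIELDS, line.split(";")[:len(LOG_FIELDS)]))


def download_logs(connection_string: str, prefix: str, dest: str) -> int:
    """Copy every $logs blob under prefix to a local folder; returns the count."""
    # Imported lazily so the parsing helpers work without azure-storage-blob installed.
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    container = service.get_container_client("$logs")  # hidden from container listings
    count = 0
    for blob in container.list_blobs(name_starts_with=prefix):
        target = Path(dest) / blob.name
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(container.download_blob(blob.name).readall())
        count += 1
    return count
```

Running `download_logs` on a schedule keeps the on-premises copy of historical operations that the compliance rules ask for.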

References:

https://docs.microsoft.com/en-us/rest/api/storageservices/enabling-storage-logging-and-accessing-log-data

NEW QUESTION 4

Contoso, Ltd. plans to configure existing applications to use Azure SQL Database. When security-related operations occur, the security team must be informed. You need to configure Azure Monitor while minimizing administrative effort.

Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

Answer: ACE

NEW QUESTION 5

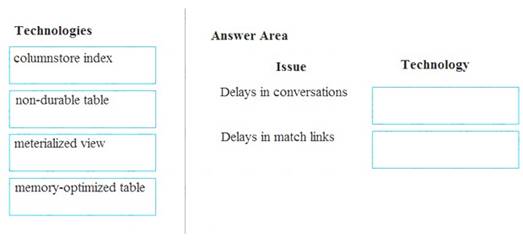

A company builds an application to allow developers to share and compare code. The conversations, code snippets, and links shared by people in the application are stored in a Microsoft Azure SQL Database instance. The application allows for searches of historical conversations and code snippets.

When users share code snippets, the code snippet is compared against previously shared code snippets by using a combination of Transact-SQL functions, including SUBSTRING, FIRST_VALUE, and SQRT. If a match is found, a link to the match is added to the conversation.

Customers report the following issues:

• Delays occur during live conversations

• A delay occurs before matching links appear after code snippets are added to conversations

You need to resolve the performance issues.

Which technologies should you use? To answer, drag the appropriate technologies to the correct issues. Each technology may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

Box 1: memory-optimized table

In-Memory OLTP can provide great performance benefits for transaction processing, data ingestion, and transient data scenarios.

Box 2: materialized view

To support efficient querying, a common solution is to generate, in advance, a view that materializes the data in a format suited to the required results set. The Materialized View pattern describes generating prepopulated views of data in environments where the source data isn't in a suitable format for querying, where generating a suitable query is difficult, or where query performance is poor due to the nature of the data or the data store.

These materialized views, which only contain data required by a query, allow applications to quickly obtain the information they need. In addition to joining tables or combining data entities, materialized views can include the current values of calculated columns or data items, the results of combining values or executing transformations on the data items, and values specified as part of the query. A materialized view can even be optimized for just a single query.
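A minimal in-process sketch of the Materialized View pattern applied to the snippet-matching delay, assuming a match can be reduced to a lookup on normalized text. The `normalize` rule and the `SnippetMatchView` class are hypothetical; in Azure SQL Database the equivalent would be a precomputed table or an indexed view, and this Python version only illustrates the idea: the expensive work happens once when rows are written, so reads become a dictionary lookup.

```python
from collections import defaultdict


def normalize(snippet: str) -> str:
    # Hypothetical normalization: collapse whitespace so formatting-only
    # differences still count as a match.
    return " ".join(snippet.split())


class SnippetMatchView:
    """Precomputed lookup: normalized snippet -> ids of conversations that contain it."""

    def __init__(self):
        self._index = defaultdict(list)

    def refresh(self, rows):
        # Full rebuild from source rows of (conversation_id, snippet).
        self._index.clear()
        for conv_id, snippet in rows:
            self._index[normalize(snippet)].append(conv_id)

    def add(self, conv_id, snippet):
        # Incremental maintenance when a new snippet is shared.
        self._index[normalize(snippet)].append(conv_id)

    def matches(self, snippet):
        # .get() does not insert a key into the defaultdict on a miss.
        return list(self._index.get(normalize(snippet), []))


view = SnippetMatchView()
view.refresh([(1, "SELECT  1"), (2, "print('x')")])
print(view.matches("SELECT 1"))  # [1]
```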

References:

https://docs.microsoft.com/en-us/azure/architecture/patterns/materialized-view

NEW QUESTION 6

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse. The data to be ingested resides in parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1. Create an external data source pointing to the Azure storage account

2. Create a workload group using the Azure storage account name as the pool name

3. Load the data using the INSERT…SELECT statement

Does the solution meet the goal?

Answer: B

Explanation:

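A workload group governs how resources are allocated to queries; it plays no part in reading files from the storage account. You need to create an external file format and an external table that use the external data source, and then load the data with a CREATE TABLE AS SELECT statement.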
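The corrected sequence can be sketched as the ordered T-SQL statements it needs. This Python helper only assembles the script text; the names `AdlsSource`, `ParquetFormat`, `ADLSCredential`, and the `ext_` table prefix are hypothetical, the database scoped credential is assumed to exist already, and inputs are assumed trusted because no identifier escaping is done.

```python
def polybase_load_script(account, container, folder, table, columns):
    """Return, in order, the T-SQL statements for a PolyBase load of Parquet files."""
    column_list = ",\n    ".join(f"{name} {sql_type}" for name, sql_type in columns)
    return [
        # 1. External data source over the Data Lake Storage Gen2 account.
        f"CREATE EXTERNAL DATA SOURCE AdlsSource WITH (\n"
        f"    TYPE = HADOOP,\n"
        f"    LOCATION = 'abfss://{container}@{account}.dfs.core.windows.net',\n"
        f"    CREDENTIAL = ADLSCredential\n);",
        # 2. External file format: Parquet is read directly, no CSV conversion needed.
        "CREATE EXTERNAL FILE FORMAT ParquetFormat WITH (FORMAT_TYPE = PARQUET);",
        # 3. External table over the folder that holds the Parquet files.
        f"CREATE EXTERNAL TABLE ext_{table} (\n    {column_list}\n) WITH (\n"
        f"    LOCATION = '{folder}',\n"
        f"    DATA_SOURCE = AdlsSource,\n"
        f"    FILE_FORMAT = ParquetFormat\n);",
        # 4. CREATE TABLE AS SELECT loads the rows into the warehouse in parallel.
        f"CREATE TABLE {table} WITH (DISTRIBUTION = ROUND_ROBIN) "
        f"AS SELECT * FROM ext_{table};",
    ]


for statement in polybase_load_script("myaccount", "raw", "/sales/", "Sales",
                                      [("Id", "INT"), ("Amount", "DECIMAL(10,2)")]):
    print(statement, end="\n\n")
```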

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lake-store

NEW QUESTION 7

A company runs Microsoft SQL Server in an on-premises virtual machine (VM).

You must migrate the database to Azure SQL Database. You synchronize users from Active Directory to Azure Active Directory (Azure AD).

You need to configure Azure SQL Database to use an Azure AD user as administrator. What should you configure?

Answer: A

NEW QUESTION 8

You develop data engineering solutions for a company.

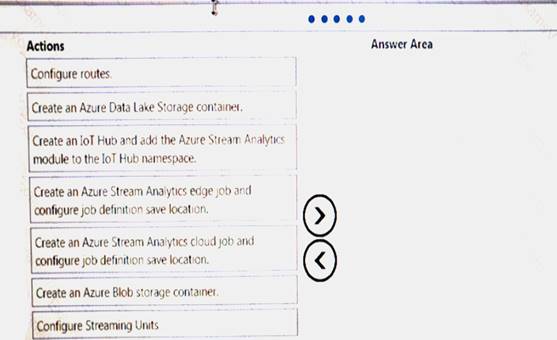

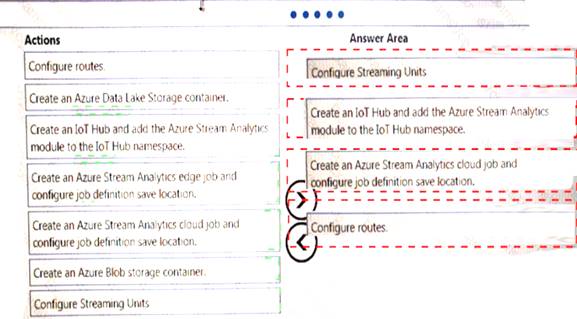

You need to deploy a Microsoft Azure Stream Analytics job for an IoT solution. The solution must:

• Minimize latency.

• Minimize bandwidth usage between the job and IoT device.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer: A

Explanation:

NEW QUESTION 9

You plan to use Microsoft Azure SQL Database instances with strict user access control. A user object must:

• Move with the database if it is run elsewhere

• Be able to create additional users

You need to create the user object with correct permissions.

Which two Transact-SQL commands should you run? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: CD

Explanation:

C: ALTER ROLE adds or removes members to or from a database role, or changes the name of a user-defined database role.

Members of the db_owner fixed database role can perform all configuration and maintenance activities on the database, and can also drop the database in SQL Server.

D: CREATE USER adds a user to the current database.

Note: Logins are created at the server level, while users are created at the database level. In other words, a login allows you to connect to the SQL Server service (also called an instance), and permissions inside the database are granted to database users, not logins. Logins are assigned to server roles (for example, serveradmin), and database users are assigned to roles within that database (for example, db_datareader or db_backupoperator).
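A sketch of the two statements from answers C and D, emitted from Python with identifier and literal quoting in the style of T-SQL QUOTENAME. The user name and password are hypothetical placeholders. `CREATE USER ... WITH PASSWORD` creates a contained database user, which has no server-level login and therefore moves with the database.

```python
def quote_name(identifier: str) -> str:
    """Bracket-quote an identifier, doubling ']' as QUOTENAME does."""
    return "[" + identifier.replace("]", "]]") + "]"


def quote_literal(value: str) -> str:
    """Single-quote a string literal, doubling embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def contained_owner_statements(user: str, password: str) -> list:
    return [
        # D: a contained database user lives in the database, not on the server.
        f"CREATE USER {quote_name(user)} WITH PASSWORD = {quote_literal(password)};",
        # C: db_owner members can perform all configuration activities, including creating users.
        f"ALTER ROLE db_owner ADD MEMBER {quote_name(user)};",
    ]


for statement in contained_owner_statements("app_admin", "<StrongPasswordHere>"):
    print(statement)
```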

References:

https://docs.microsoft.com/en-us/sql/t-sql/statements/alter-role-transact-sql

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-user-transact-sql

NEW QUESTION 10

A company plans to develop solutions to perform batch processing of multiple sets of geospatial data. You need to implement the solutions.

Which Azure services should you use? To answer, select the appropriate configuration in the answer area. NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

NEW QUESTION 11

You manage a financial computation data analysis process. Microsoft Azure virtual machines (VMs) run the process in daily jobs and store the results in virtual hard drives (VHDs).

The VMs produce results using data from the previous day and store the results in a snapshot of the VHD. When a new month begins, a process creates a new VHD.

You must implement the following data retention requirements:

• Daily results must be kept for 90 days

• Data for the current year must be available for weekly reports

• Data from the previous 10 years must be stored for auditing purposes

• Data required for an audit must be produced within 10 days of a request

You need to enforce the data retention requirements while minimizing cost.

How should you configure the lifecycle policy? To answer, drag the appropriate JSON segments to the correct locations. Each JSON segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

The Set-AzStorageAccountManagementPolicy cmdlet creates or modifies the management policy of an Azure Storage account.

Example: Create or update the management policy of a Storage account with ManagementPolicy rule objects.

```powershell
# Rule 1: delete base blobs 100 days after last modification, archive them after 50 days,
# move them to cool after 30 days, and delete snapshots 100 days after creation.
# The rule only applies to blobs whose names start with "ab" or "cd".
$action1 = Add-AzStorageAccountManagementPolicyAction -BaseBlobAction Delete -daysAfterModificationGreaterThan 100
$action1 = Add-AzStorageAccountManagementPolicyAction -InputObject $action1 -BaseBlobAction TierToArchive -daysAfterModificationGreaterThan 50
$action1 = Add-AzStorageAccountManagementPolicyAction -InputObject $action1 -BaseBlobAction TierToCool -daysAfterModificationGreaterThan 30
$action1 = Add-AzStorageAccountManagementPolicyAction -InputObject $action1 -SnapshotAction Delete -daysAfterCreationGreaterThan 100
$filter1 = New-AzStorageAccountManagementPolicyFilter -PrefixMatch ab,cd
$rule1 = New-AzStorageAccountManagementPolicyRule -Name Test -Action $action1 -Filter $filter1

# Rule 2: delete base blobs 100 days after last modification, with no prefix filter.
$action2 = Add-AzStorageAccountManagementPolicyAction -BaseBlobAction Delete -daysAfterModificationGreaterThan 100
$filter2 = New-AzStorageAccountManagementPolicyFilter
```

References:

https://docs.microsoft.com/en-us/powershell/module/az.storage/set-azstorageaccountmanagementpolicy
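The same kind of policy can also be written directly as lifecycle management JSON, which is the form the drag-and-drop question works with. A sketch that builds one rule as a Python dict; the rule name, the `results/` prefix, and the day counts are illustrative assumptions, not the exam's answer key, and the rule assumes the results are kept as block blobs, since lifecycle tiering actions target block blobs.

```python
import json


def lifecycle_rule(name, prefixes, cool_after=None, archive_after=None,
                   delete_after=None, snapshot_delete_after=None):
    """Build one rule in the lifecycle management policy JSON schema."""
    base_blob = {}
    if cool_after is not None:
        base_blob["tierToCool"] = {"daysAfterModificationGreaterThan": cool_after}
    if archive_after is not None:
        base_blob["tierToArchive"] = {"daysAfterModificationGreaterThan": archive_after}
    if delete_after is not None:
        base_blob["delete"] = {"daysAfterModificationGreaterThan": delete_after}
    actions = {}
    if base_blob:
        actions["baseBlob"] = base_blob
    if snapshot_delete_after is not None:
        actions["snapshot"] = {"delete": {"daysAfterCreationGreaterThan": snapshot_delete_after}}
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            "actions": actions,
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": prefixes},
        },
    }


# Illustrative values: snapshots kept 90 days, base blobs archived after a year
# and deleted after roughly 10 years.
policy = {"rules": [lifecycle_rule("results-retention", ["results/"],
                                   archive_after=365, delete_after=3650,
                                   snapshot_delete_after=90)]}
print(json.dumps(policy, indent=2))
```

The archive tier is the cheapest place for audit data; because rehydrating archived blobs takes hours rather than days, it fits a 10-day production window.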

NEW QUESTION 12

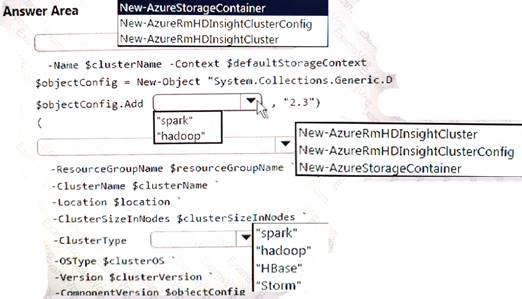

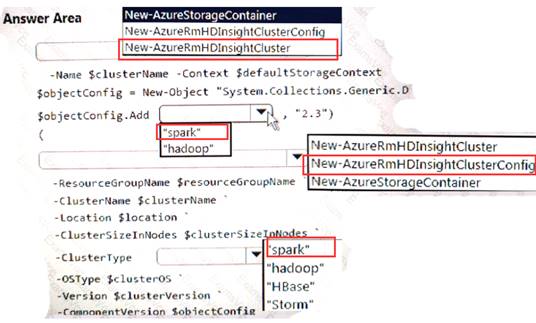

You develop data engineering solutions for a company.

A project requires an in-memory batch data processing solution.

You need to provision an HDInsight cluster for batch processing of data on Microsoft Azure.

How should you complete the PowerShell segment? To answer, select the appropriate option in the answer area.

NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

NEW QUESTION 13

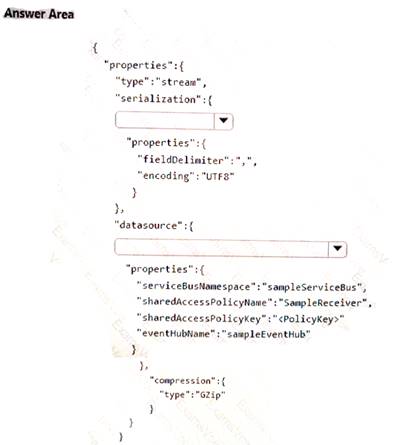

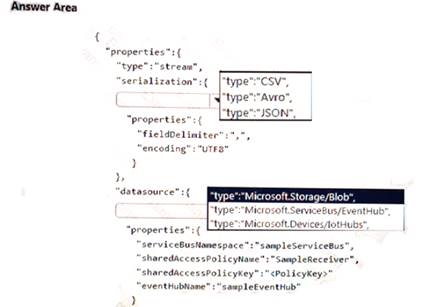

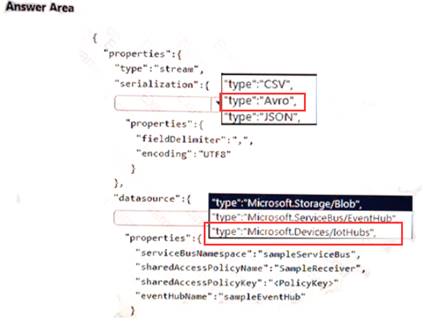

A company plans to analyze a continuous flow of data from a social media platform by using Microsoft Azure Stream Analytics. The incoming data is formatted as one record per row.

You need to create the input stream.

How should you complete the REST API segment? To answer, select the appropriate configuration in the answer area.

NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

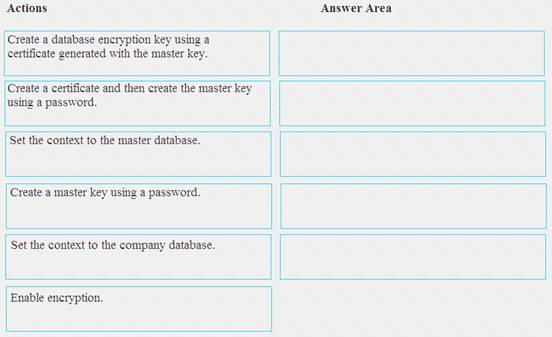

NEW QUESTION 14

You manage security for a database that supports a line of business application. Private and personal data stored in the database must be protected and encrypted. You need to configure the database to use Transparent Data Encryption (TDE).

Which five actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer: A

Explanation:

Step 1: Create a master key

Step 2: Create or obtain a certificate protected by the master key

Step 3: Set the context to the company database

Step 4: Create a database encryption key and protect it by the certificate

Step 5: Set the database to use encryption

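Example code:

```sql
-- Step 1: create the master key in the master database
USE master;
GO

CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<UseStrongPasswordHere>';
GO

-- Step 2: create a certificate protected by the master key
CREATE CERTIFICATE MyServerCert WITH SUBJECT = 'My DEK Certificate';
GO

-- Step 3: switch to the user database
USE AdventureWorks2012;
GO

-- Step 4: create the database encryption key, protected by the certificate
CREATE DATABASE ENCRYPTION KEY
WITH ALGORITHM = AES_128
ENCRYPTION BY SERVER CERTIFICATE MyServerCert;
GO

-- Step 5: turn on Transparent Data Encryption
ALTER DATABASE AdventureWorks2012
SET ENCRYPTION ON;
GO
```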

References:

https://docs.microsoft.com/en-us/sql/relational-databases/security/encryption/transparent-data-encryption

NEW QUESTION 15

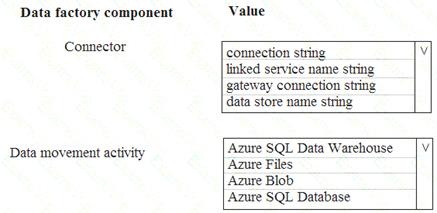

You need to set up the Azure Data Factory JSON definition for Tier 10 data.

What should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

Box 1: Connection String

To use storage account key authentication, you use the connectionString property, which specifies the information needed to connect to Blob Storage.

Mark this field as a SecureString to store it securely in Data Factory. You can also put the account key in Azure Key Vault and pull the accountKey configuration out of the connection string.
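A sketch of the two linked service shapes described above, built as Python dicts and serialized to the JSON that Data Factory stores. The linked service name, account name, Key Vault linked service name, and secret name are hypothetical placeholders.

```python
import json


def blob_linked_service(name, account_name, account_key=None,
                        key_vault_ls=None, secret_name=None):
    """Build an AzureBlobStorage linked service that uses account key authentication.

    Either embed the key in a SecureString connection string, or leave the key out
    of the connection string and reference an Azure Key Vault secret instead.
    """
    if key_vault_ls is not None:
        type_properties = {
            "connectionString": f"DefaultEndpointsProtocol=https;AccountName={account_name};",
            "accountKey": {
                "type": "AzureKeyVaultSecret",
                "store": {"referenceName": key_vault_ls, "type": "LinkedServiceReference"},
                "secretName": secret_name,
            },
        }
    else:
        type_properties = {
            "connectionString": {
                "type": "SecureString",
                "value": (f"DefaultEndpointsProtocol=https;AccountName={account_name};"
                          f"AccountKey={account_key}"),
            }
        }
    return {"name": name,
            "properties": {"type": "AzureBlobStorage", "typeProperties": type_properties}}


print(json.dumps(blob_linked_service("Tier10Blob", "contosotier10",
                                     key_vault_ls="CompanyKeyVault",
                                     secret_name="tier10-key"), indent=2))
```

The Key Vault variant keeps the account key out of the Data Factory definition entirely, which is usually preferable when the same key is shared across services.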

Box 2: Azure Blob

Tier 10 reporting data must be stored in Azure Blobs

References:

https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-blob-storage

NEW QUESTION 16

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution. Determine whether the solution meets the stated goals.

You develop a data ingestion process that will import data to a Microsoft Azure SQL Data Warehouse. The data to be ingested resides in parquet files stored in an Azure Data Lake Gen 2 storage account.

You need to load the data from the Azure Data Lake Gen 2 storage account into the Azure SQL Data Warehouse.

Solution:

1. Use Azure Data Factory to convert the parquet files to CSV files

2. Create an external data source pointing to the Azure storage account

3. Create an external file format and external table using the external data source

4. Load the data using the INSERT…SELECT statement

Does the solution meet the goal?

Answer: B

Explanation:

There is no need to convert the parquet files to CSV files.

You load the data using the CREATE TABLE AS SELECT statement.

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-load-from-azure-data-lake-store

NEW QUESTION 17

You develop data engineering solutions for a company.

You need to ingest and visualize real-time Twitter data by using Microsoft Azure.

Which three technologies should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: BDF

Explanation:

You can use Azure Logic Apps to send tweets to an event hub, and then use a Stream Analytics job to read from the event hub and send the data to Power BI.
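In the answer, Logic Apps performs the "send tweets to an event hub" step. The sketch below swaps in a small Python producer built on the `azure-eventhub` SDK to show what that step amounts to; the tweet dicts, connection string, and event hub name are hypothetical, and fetching tweets from Twitter is not shown.

```python
import json


def tweets_to_payloads(tweets):
    """Serialize each tweet dict to a JSON string, one event per tweet."""
    return [json.dumps(t, ensure_ascii=False) for t in tweets]


def send_tweets(connection_string, eventhub_name, tweets):
    # Imported lazily so the serialization helper works without azure-eventhub installed.
    from azure.eventhub import EventData, EventHubProducerClient

    producer = EventHubProducerClient.from_connection_string(
        connection_string, eventhub_name=eventhub_name)
    with producer:
        batch = producer.create_batch()
        for payload in tweets_to_payloads(tweets):
            # add() raises ValueError when the batch is full; a production version
            # would send the batch and start a new one at that point.
            batch.add(EventData(payload))
        producer.send_batch(batch)
```

A Stream Analytics job with this event hub as its input and a Power BI output then completes the pipeline.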

References:

https://community.powerbi.com/t5/Integrations-with-Files-and/Twitter-streaming-analytics-step-by-step/td-p/95

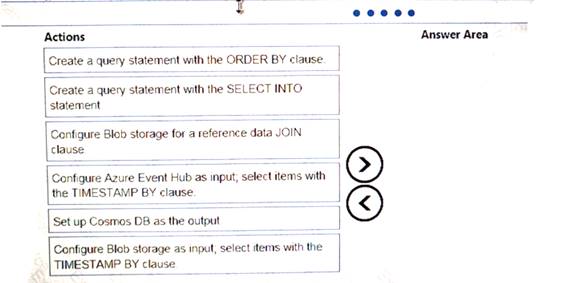

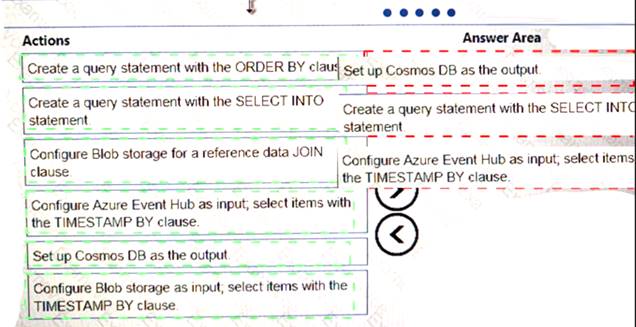

NEW QUESTION 18

You implement an event processing solution using Microsoft Azure Stream Analytics. The solution must meet the following requirements:

• Ingest data from Blob storage

• Analyze data in real time

• Store processed data in Azure Cosmos DB

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer: A

Explanation:

NEW QUESTION 19

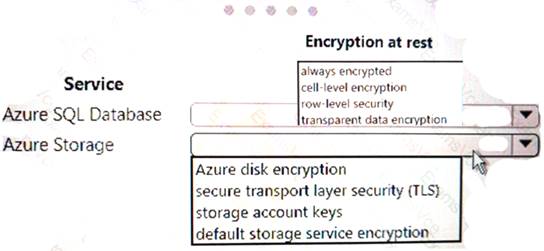

Your company uses Azure SQL Database and Azure Blob storage.

All data at rest must be encrypted by using the company's own key. The solution must minimize administrative effort and the impact to applications which use the database.

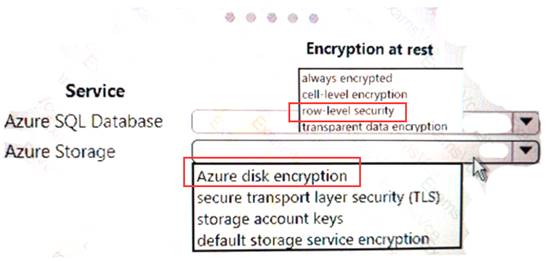

You need to configure security.

What should you implement? To answer, select the appropriate option in the answer area. NOTE: Each correct selection is worth one point.

Answer: A

Explanation:

NEW QUESTION 20

You are the data engineer for your company. An application uses a NoSQL database to store data. The database uses the key-value and wide-column NoSQL database types.

Developers need to access data in the database using an API.

You need to determine which API to use for the database model and type.

Which two APIs should you use? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

Answer: BE

Explanation:

B: Azure Cosmos DB is the globally distributed, multimodel database service from Microsoft for mission-critical applications. It is a multimodel database and supports document, key-value, graph, and columnar data models.

E: Wide-column stores store data together as columns instead of rows and are optimized for queries over large datasets. The most popular are Cassandra and HBase.

References:

https://docs.microsoft.com/en-us/azure/cosmos-db/graph-introduction

https://www.mongodb.com/scale/types-of-nosql-databases

NEW QUESTION 21

A company is designing a hybrid solution to synchronize data from an on-premises Microsoft SQL Server database to Azure SQL Database.

You must perform an assessment of databases to determine whether data will move without compatibility issues.

You need to perform the assessment. Which tool should you use?

Answer: E

Explanation:

The Data Migration Assistant (DMA) helps you upgrade to a modern data platform by detecting compatibility issues that can impact database functionality in your new version of SQL Server or Azure SQL Database. DMA recommends performance and reliability improvements for your target environment and allows you to move your schema, data, and uncontained objects from your source server to your target server.

References:

https://docs.microsoft.com/en-us/sql/dma/dma-overview

NEW QUESTION 22

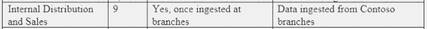

You need to process and query ingested Tier 9 data.

Which two options should you use? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

Answer: EF

Explanation:

Event Hubs provides a Kafka endpoint that can be used by your existing Kafka based applications as an alternative to running your own Kafka cluster.

You can stream data into Kafka-enabled Event Hubs and process it with Azure Stream Analytics in the following steps:

1. Create a Kafka-enabled Event Hubs namespace.

2. Create a Kafka client that sends messages to the event hub.

3. Create a Stream Analytics job that copies data from the event hub into Azure Blob storage.

Scenario:

Tier 9 reporting must be moved to Event Hubs, queried, and persisted in the same Azure region as the company’s main office
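Step 2 of the list above, the Kafka client, mostly comes down to pointing an ordinary Kafka producer at the Event Hubs Kafka endpoint. A sketch using the `confluent-kafka` package; the namespace, connection string, and topic (the event hub name) are hypothetical placeholders.

```python
def eventhubs_kafka_config(namespace, connection_string):
    """librdkafka settings for an Event Hubs Kafka endpoint."""
    return {
        # Event Hubs exposes its Kafka endpoint on port 9093 of the namespace host.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The literal user name "$ConnectionString" makes the password a connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }


def send_messages(namespace, connection_string, topic, messages):
    from confluent_kafka import Producer  # lazy import: optional dependency

    producer = Producer(eventhubs_kafka_config(namespace, connection_string))
    for message in messages:
        producer.produce(topic, value=message.encode("utf-8"))  # topic = event hub name
    producer.flush()  # block until all queued messages are delivered
```

Because only the client configuration changes, an existing Kafka-based application can move its Tier 9 traffic to Event Hubs without rewriting its producer logic.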

References:

https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-kafka-stream-analytics

NEW QUESTION 23

......

P.S. Simply pass is now offering 100% pass-ensured DP-200 dumps! All DP-200 exam questions have been updated with correct answers: https://www.simply-pass.com/Microsoft-exam/DP-200-dumps.html (88 New Questions)