Master the MLS-C01 AWS Certified Machine Learning - Specialty content and be ready for exam day quickly with this Pass4sure MLS-C01 book. We guarantee it! We give you real MLS-C01 questions in our Amazon-Web-Services MLS-C01 braindumps. The latest 100% valid Amazon-Web-Services MLS-C01 exam questions are on the page below. You can use our Amazon-Web-Services MLS-C01 braindumps to pass your exam.

Free demo questions for Amazon-Web-Services MLS-C01 Exam Dumps Below:

NEW QUESTION 1

When submitting Amazon SageMaker training jobs using one of the built-in algorithms, which common parameters MUST be specified? (Select THREE.)

Answer: AEF

NEW QUESTION 2

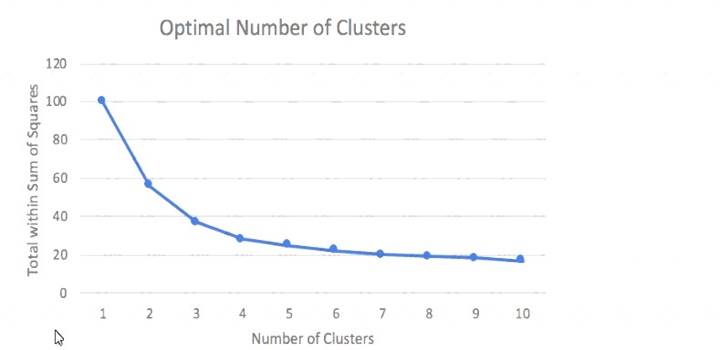

A Machine Learning Specialist prepared the following graph displaying the results of k-means for k = [1:10].

Considering the graph, what is a reasonable selection for the optimal choice of k?

Answer: C
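The answer options and the plotted values are not reproduced on this page, so the following is only a minimal sketch of the elbow heuristic that questions of this kind usually rely on. It assumes the graph plots within-cluster sum of squares (inertia) against k; the function name `elbow_k` and the sample numbers are hypothetical and are not read from the figure.

```python
def elbow_k(inertias):
    """Return the k (1-based) where the drop in inertia flattens most sharply.

    inertias[i] is assumed to be the within-cluster sum of squares for k = i + 1.
    """
    if len(inertias) < 3:
        raise ValueError("need inertia for at least three values of k")
    best_k, best_bend = None, float("-inf")
    for i in range(1, len(inertias) - 1):
        # Second difference: improvement gained at this k minus improvement at the next k.
        bend = (inertias[i - 1] - inertias[i]) - (inertias[i] - inertias[i + 1])
        if bend > best_bend:
            best_k, best_bend = i + 1, bend
    return best_k


# Hypothetical inertia values for k = 1..10 (illustrative only).
example_inertias = [1000, 600, 350, 150, 130, 115, 105, 98, 93, 90]
print(elbow_k(example_inertias))
```

With these made-up values the curve bends most sharply at k = 4; on a real plot the same idea is usually applied by eye.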

NEW QUESTION 3

A Data Scientist wants to gain real-time insights into a data stream of GZIP files. Which solution would allow the use of SQL to query the stream with the LEAST latency?

Answer: A

NEW QUESTION 4

A Machine Learning Specialist works for a credit card processing company and needs to predict which transactions may be fraudulent in near-real time. Specifically, the Specialist must train a model that returns the probability that a given transaction may be fraudulent.

How should the Specialist frame this business problem?

Answer: A

NEW QUESTION 5

A Machine Learning Specialist is building a supervised model that will evaluate customers' satisfaction with their mobile phone service based on recent usage. The model's output should infer whether or not a customer is likely to switch to a competitor in the next 30 days.

Which of the following modeling techniques should the Specialist use?

Answer: D

NEW QUESTION 6

Example Corp has an annual sale event from October to December. The company has sequential sales data from the past 15 years and wants to use Amazon ML to predict the sales for this year's upcoming event. Which method should Example Corp use to split the data into a training dataset and evaluation dataset?

Answer: C
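The answer options are not listed here, so this is only a sketch of one common way to split sequential data: keep the records in time order and hold out the most recent ones for evaluation, rather than shuffling. The function name `chronological_split`, the `(year, sales)` tuple layout, and the sample figures are all hypothetical.

```python
def chronological_split(records, n_eval):
    """Split (timestamp, value) records so the newest n_eval records form the evaluation set."""
    if not 0 < n_eval < len(records):
        raise ValueError("n_eval must leave at least one record on each side")
    ordered = sorted(records, key=lambda r: r[0])  # oldest first
    return ordered[:-n_eval], ordered[-n_eval:]


# Hypothetical yearly sales totals for 15 consecutive years.
sales = [(2009 + i, 100 + 5 * i) for i in range(15)]
train, evaluation = chronological_split(sales, n_eval=3)
print(train[-1], evaluation[0])
```

Every training record precedes every evaluation record, so the evaluation mimics predicting a future period from past data.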

NEW QUESTION 7

A Machine Learning Specialist has built a model using Amazon SageMaker built-in algorithms and is not getting the expected accuracy. The Specialist wants to use hyperparameter optimization to increase the model's accuracy.

Which method is the MOST repeatable and requires the LEAST amount of effort to achieve this?

Answer: B

NEW QUESTION 8

A Data Scientist needs to create a serverless ingestion and analytics solution for high-velocity, real-time streaming data.

The ingestion process must buffer and convert incoming records from JSON to a query-optimized, columnar format without data loss. The output datastore must be highly available, and Analysts must be able to run SQL queries against the data and connect to existing business intelligence dashboards.

Which solution should the Data Scientist build to satisfy the requirements?

Answer: A

NEW QUESTION 9

During mini-batch training of a neural network for a classification problem, a Data Scientist notices that training accuracy oscillates. What is the MOST likely cause of this issue?

Answer: D
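The answer options are not shown on this page. One commonly cited cause of oscillation is a learning rate that is too large, and the sketch below illustrates that effect under strong simplifying assumptions: plain gradient descent on the toy loss f(w) = w², not a neural network and not mini-batches. The helper names `descend` and `sign_flips` are hypothetical.

```python
def descend(lr, steps=6, w=1.0):
    """Run gradient descent on f(w) = w**2 and return the sequence of weights."""
    path = [w]
    for _ in range(steps):
        grad = 2.0 * w          # derivative of w**2
        w = w - lr * grad       # standard gradient descent update
        path.append(w)
    return path


def sign_flips(path):
    """Count how often consecutive weights land on opposite sides of the minimum at 0."""
    return sum(1 for a, b in zip(path, path[1:]) if a * b < 0)


print(sign_flips(descend(lr=0.95)))  # large step: overshoots the minimum every update
print(sign_flips(descend(lr=0.1)))   # small step: approaches the minimum from one side
```

With the large step size each update jumps past the minimum, which is the same overshooting behaviour that can make training metrics swing back and forth.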

NEW QUESTION 10

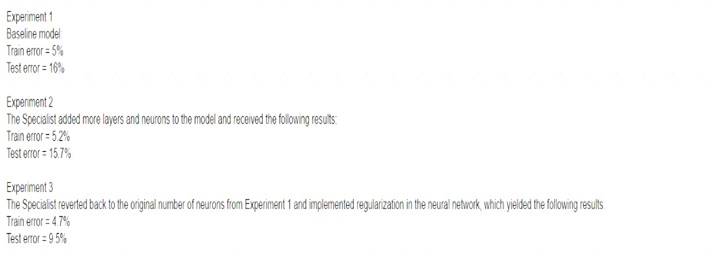

A Machine Learning Specialist discovers the following statistics while experimenting on a model.

What can the Specialist conclude from the experiments?

Answer: C

NEW QUESTION 11

A Machine Learning Specialist is creating a new natural language processing application that processes a dataset comprised of 1 million sentences. The aim is to then run Word2Vec to generate embeddings of the sentences and enable different types of predictions.

Here is an example from the dataset:

"The quck BROWN FOX jumps over the lazy dog "

Which of the following are the operations the Specialist needs to perform to correctly sanitize and prepare the data in a repeatable manner? (Select THREE)

Answer: ABD
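The options behind A, B, and D are not listed on this page. As a hedged illustration only, the sketch below applies three preprocessing steps that are commonly used before Word2Vec: lowercasing, tokenizing, and removing stop words. Which steps the question intends is an assumption, and the `STOP_WORDS` set and `prepare_sentence` function are hypothetical.

```python
import re

# Tiny illustrative stop-word list; a real pipeline would use a fuller one.
STOP_WORDS = {"the", "a", "an", "over"}


def prepare_sentence(sentence):
    """Lowercase the text, split it into word tokens, and drop stop words."""
    lowered = sentence.lower()
    tokens = re.findall(r"[a-z0-9']+", lowered)
    return [t for t in tokens if t not in STOP_WORDS]


print(prepare_sentence("The quck BROWN FOX jumps over the lazy dog "))
```

Note that this sketch leaves the misspelled token "quck" unchanged; it does not attempt spelling correction.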

NEW QUESTION 12

A company has raw user and transaction data stored in Amazon S3, a MySQL database, and Amazon Redshift. A Data Scientist needs to perform an analysis by joining the three datasets from Amazon S3, MySQL, and Amazon Redshift, and then calculating the average of a few selected columns from the joined data.

Which AWS service should the Data Scientist use?

Answer: A

NEW QUESTION 13

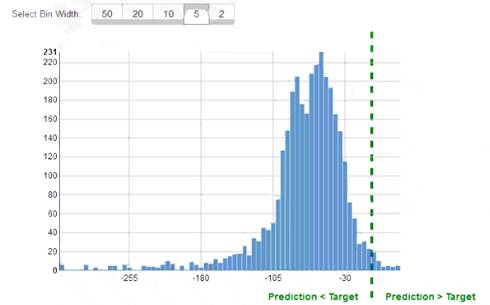

While reviewing the histogram for residuals on regression evaluation data, a Machine Learning Specialist notices that the residuals do not form a zero-centered bell shape, as shown. What does this mean?

Answer: D

NEW QUESTION 14

A company is running an Amazon SageMaker training job that will access data stored in its Amazon S3 bucket. A compliance policy requires that the data never be transmitted across the internet. How should the company set up the job?

Answer: D

NEW QUESTION 15

A retail company intends to use machine learning to categorize new products. A labeled dataset of current products was provided to the Data Science team. The dataset includes 1,200 products. The labeled dataset has 15 features for each product, such as title, dimensions, weight, and price. Each product is labeled as belonging to one of six categories, such as books, games, electronics, and movies.

Which model should be used for categorizing new products using the provided dataset for training?

Answer: B

NEW QUESTION 16

A Machine Learning Specialist has completed a proof of concept for a company using a small data sample, and now the Specialist is ready to implement an end-to-end solution in AWS using Amazon SageMaker. The historical training data is stored in Amazon RDS.

Which approach should the Specialist use for training a model using that data?

Answer: B

NEW QUESTION 17

A Machine Learning Specialist trained a regression model, but the first iteration needs optimizing. The Specialist needs to understand whether the model is more frequently overestimating or underestimating the target.

What option can the Specialist use to determine whether it is overestimating or underestimating the target value?

Answer: C
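The answer options are not reproduced here. One way to check the direction of a regression model's errors is to look at the signs of its residuals, which is sketched below. The convention residual = actual minus predicted, the function name `residual_bias`, and the sample numbers are assumptions made for illustration.

```python
def residual_bias(actual, predicted):
    """Return (overestimates, underestimates, mean residual) with residual = actual - predicted."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    residuals = [a - p for a, p in zip(actual, predicted)]
    over = sum(1 for r in residuals if r < 0)    # prediction above the target
    under = sum(1 for r in residuals if r > 0)   # prediction below the target
    return over, under, sum(residuals) / len(residuals)


# Hypothetical targets and predictions.
print(residual_bias([10, 12, 9, 15], [11, 13, 8, 17]))
```

A mostly negative set of residuals, as in this made-up data, indicates the model tends to overestimate; a histogram of the same residuals shows this as a distribution centered below zero.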

NEW QUESTION 18

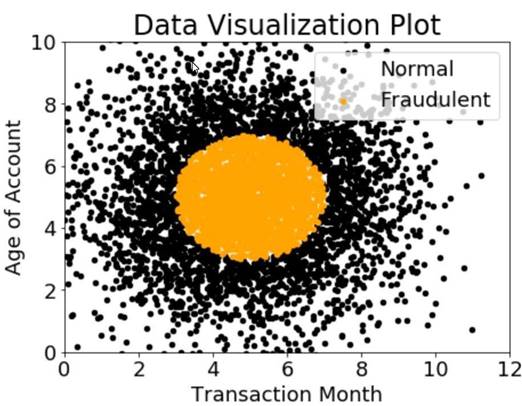

A company wants to classify user behavior as either fraudulent or normal. Based on internal research, a Machine Learning Specialist would like to build a binary classifier based on two features: age of account and transaction month. The class distribution for these features is illustrated in the figure provided.

Based on this information, which model would have the HIGHEST accuracy?

Answer: B

NEW QUESTION 19

A Machine Learning Specialist is implementing a full Bayesian network on a dataset that describes public transit in New York City. One of the random variables is discrete, and represents the number of minutes New Yorkers wait for a bus given that the buses cycle every 10 minutes, with a mean of 3 minutes.

Which prior probability distribution should the ML Specialist use for this variable?

Answer: D

NEW QUESTION 20

A Machine Learning Specialist is working with multiple data sources containing billions of records that need to be joined. What feature engineering and model development approach should the Specialist take with a dataset this large?

Answer: B

NEW QUESTION 21

A large consumer goods manufacturer has the following products on sale:

• 34 different toothpaste variants

• 48 different toothbrush variants

• 43 different mouthwash variants

The entire sales history of all these products is available in Amazon S3. Currently, the company is using custom-built autoregressive integrated moving average (ARIMA) models to forecast demand for these products. The company wants to predict the demand for a new product that will soon be launched.

Which solution should a Machine Learning Specialist apply?

Answer: B

Explanation:

The Amazon SageMaker DeepAR forecasting algorithm is a supervised learning algorithm for forecasting scalar (one-dimensional) time series using recurrent neural networks (RNN). Classical forecasting methods, such as autoregressive integrated moving average (ARIMA) or exponential smoothing (ETS), fit a single model to each individual time series. They then use that model to extrapolate the time series into the future.
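In contrast to the one-model-per-series approach described above, DeepAR is trained on many related series at once. As a rough sketch, the code below writes several series as JSON Lines records with `start`, `target`, and an optional `cat` field, which is the input layout DeepAR is understood to accept; the helper `to_deepar_lines`, the category encoding, and the demand numbers are hypothetical.

```python
import json


def to_deepar_lines(series):
    """Serialize a list of time series dicts as one JSON object per line."""
    lines = []
    for item in series:
        record = {"start": item["start"], "target": item["target"]}
        if "cat" in item:
            # Optional categorical feature, e.g. an integer code for the product group.
            record["cat"] = item["cat"]
        lines.append(json.dumps(record))
    return "\n".join(lines)


# Hypothetical weekly demand for two existing products (0 = toothpaste, 1 = toothbrush).
history = [
    {"start": "2023-01-02 00:00:00", "target": [120, 135, 128, 140], "cat": [0]},
    {"start": "2023-01-02 00:00:00", "target": [80, 82, 79, 91], "cat": [1]},
]
print(to_deepar_lines(history))
```

Grouping series by category in this way is what lets a single model share patterns across products, which is the usual argument for using it on an item with little or no history of its own.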

NEW QUESTION 22

A Data Science team is designing a dataset repository where it will store a large amount of training data commonly used in its machine learning models. As Data Scientists may create an arbitrary number of new datasets every day, the solution has to scale automatically and be cost-effective. Also, it must be possible to explore the data using SQL.

Which storage scheme is MOST adapted to this scenario?

Answer: A

NEW QUESTION 23

A Machine Learning Specialist is building a model that will perform time series forecasting using Amazon SageMaker. The Specialist has finished training the model and is now planning to perform load testing on the endpoint so they can configure Auto Scaling for the model variant.

Which approach will allow the Specialist to review the latency, memory utilization, and CPU utilization during the load test?

Answer: B

NEW QUESTION 24

A Machine Learning Specialist working for an online fashion company wants to build a data ingestion solution for the company's Amazon S3-based data lake.

The Specialist wants to create a set of ingestion mechanisms that will enable future capabilities comprised of:

• Real-time analytics

• Interactive analytics of historical data

• Clickstream analytics

• Product recommendations

Which services should the Specialist use?

Answer: A

NEW QUESTION 25

......
